TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities and articles.


IN TODAY'S EDITION

🧠 Use Case

Why On-Prem Is a Must-Have Skill for DevOps Engineers

👀 Remote Jobs

Greptile is hiring a Support Engineer - DevOps

Remote Location: Worldwide

GitLab is hiring a Manager, Product Security Engineering

Remote Location: Worldwide

📚 Resources


🧠 USE CASE

Why On-Prem Is a Must-Have Skill for DevOps Engineers

Basecamp, a well-known project management software company, made headlines with the statement:

We’ve run extensively in both Amazon’s cloud and Google’s cloud, but the savings never materialized. So we’ve left.

And they pressed ahead. 👇️

Initially, it sounded like an exaggerated reaction. Some cloud gurus even said it wasn't possible: even if costs went down, performance would likely suffer as the trade-off.

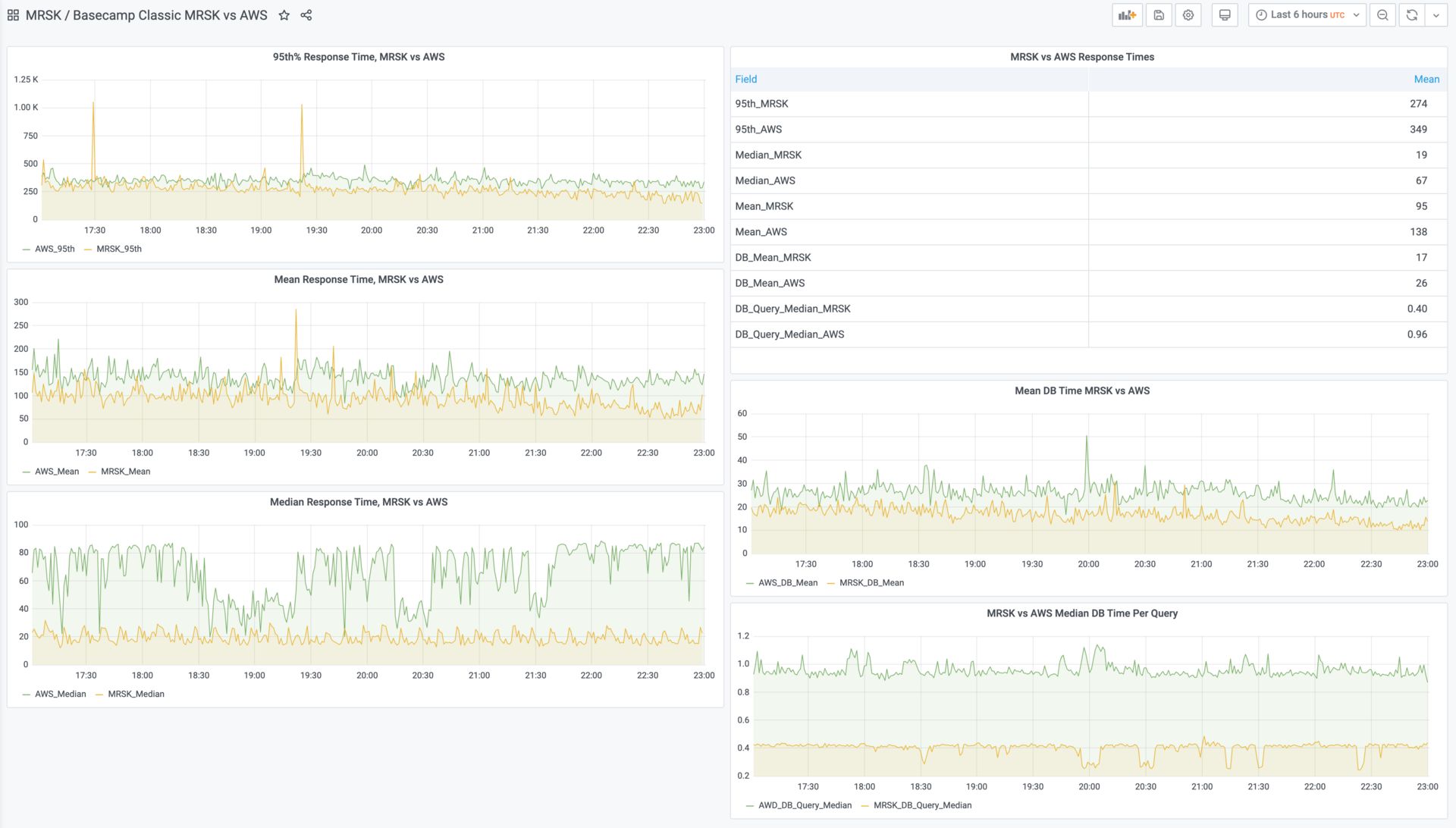

But soon, Basecamp silenced the doubts by publishing striking evidence of performance improvements after their cloud exit:

Credit: David Heinemeier Hansson

Here’s the summary:

Median Request Time: 19ms (from 67ms)

Mean Request Time: 95ms (from 138ms)

Median Query Time: 50% reduction

95% of Requests: Below 300ms

Compute: 196 vCPUs available now (from 122 vCPUs)

Hardware Cost: Less than $20,000 per machine, amortized to $333/month over 5 years
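The hardware line is plain straight-line amortization, and the other lines are before/after comparisons. A minimal Python sketch that reproduces the arithmetic, assuming "5 years" means 60 monthly periods and using only the numbers listed above:

```python
# Back-of-the-envelope check of the Basecamp figures quoted above.
# Assumption: a 5-year amortization period is 60 monthly periods.

def monthly_amortized(cost: float, years: int) -> float:
    """Straight-line amortization of a one-off hardware cost."""
    return cost / (years * 12)

def pct_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100

hardware_per_month = monthly_amortized(20_000, 5)
median_drop = pct_reduction(67, 19)    # median request time, ms
mean_drop = pct_reduction(138, 95)     # mean request time, ms
vcpu_gain = (196 - 122) / 122 * 100    # extra compute headroom, percent

print(f"hardware: ${hardware_per_month:.0f}/month per machine")
print(f"median request time: {median_drop:.0f}% lower")
print(f"mean request time: {mean_drop:.0f}% lower")
print(f"vCPUs: {vcpu_gain:.0f}% more")
```

With these inputs it prints about $333/month per machine, a 72% lower median request time, a 31% lower mean request time and 61% more vCPUs.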

This is no longer surprising news; we keep hearing about companies exiting the cloud, either completely or partially.

My hero in this context is definitely HiveKit.

They not only saved 98% in cloud costs but also ended up writing their own database.

HiveKit is a platform that tracks thousands of vehicles and people, each sending location updates every second. Imagine having 13,000 vehicles constantly pinging you with data. Now multiply that by a full month, and you’ve got 3.5 billion updates.

They were using AWS Aurora with the PostGIS extension to store all this geospatial data. Aurora worked well initially, handling the load, but as HiveKit’s data grew, so did their AWS bills. They were burning through $10k a month just on the database, and it was only going to get worse.

At this point, most companies would either negotiate with their cloud provider or try to optimize their queries. Not HiveKit. They took a different approach: they built their own.

Why Build Their Own Database?

So why go through the pain of building a database from scratch when there are so many good ones out there? Well, HiveKit had very specific needs. They didn’t just want a regular database; they needed something that could:

Handle extreme write throughput – Their system needed to support 30,000 location updates per second across multiple nodes.

Parallelism without limits – They wanted as many nodes as possible to write simultaneously without locking issues or performance degradation.

Minimal storage footprint – With 3.5 billion updates each month, storage had to be extremely efficient.
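To put these requirements in perspective, here is a rough sizing in Python. It assumes every update costs the 34 bytes HiveKit reports for its delta format and ignores index files and filesystem overhead, so treat the results as lower bounds:

```python
# Rough sizing for HiveKit-style write volumes.
# Assumption: each update averages the quoted 34 bytes on disk;
# index files and filesystem overhead are ignored.

BYTES_PER_UPDATE = 34
UPDATES_PER_SECOND = 30_000          # target write rate across nodes
UPDATES_PER_MONTH = 3_500_000_000    # monthly volume quoted in the article

def to_gb(n_bytes: float) -> float:
    """Convert bytes to decimal gigabytes (10**9 bytes)."""
    return n_bytes / 1e9

ingest_mb_per_s = UPDATES_PER_SECOND * BYTES_PER_UPDATE / 1e6
monthly_gb = to_gb(UPDATES_PER_MONTH * BYTES_PER_UPDATE)
updates_per_gb = 1e9 / BYTES_PER_UPDATE

print(f"ingest at peak: {ingest_mb_per_s:.2f} MB/s")
print(f"monthly storage: {monthly_gb:.0f} GB")
print(f"updates per GB: {updates_per_gb / 1e6:.1f} million")
```

That works out to about 1.02 MB/s of sustained writes at peak and roughly 119 GB for a month of updates, in line with the article's figure of about 30 million updates per GB.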

HiveKit chose to take control of their data. They built an in-process storage engine that writes data in a delta-based binary format, tailored to their needs.

How Did HiveKit Do It?

Delta-Based Format: Every 200 writes, HiveKit stores the full state of an object; in between, only the changes (deltas) are recorded. This shrinks a location update to just 34 bytes, fitting 30 million updates into 1GB of storage.

Smart Indexing: A separate index file converts object IDs into 4-byte identifiers, speeding up lookups from 2 seconds to 13 milliseconds.

Batching Updates: Instead of writing updates continuously, they batch and write once per second, reducing write operations and cutting storage costs.
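The three techniques above can be combined in a small Python sketch. This is not HiveKit's code or file format: the record layouts (13-byte full records and 9-byte deltas, with coordinates stored as integers in 1e-7 degree units), the in-memory dict standing in for the separate index file, and the names `LocationWriter` and `replay` are all hypothetical. It keeps the article's parameters: a full state every 200 writes per object, 4-byte object identifiers, and one batched write per second.

```python
import io
import struct
from typing import BinaryIO, Dict, Tuple

# Hypothetical layouts for illustration, not HiveKit's actual format.
# Coordinates are integers in units of 1e-7 degrees, so replaying deltas
# reproduces the written position exactly (no floating-point drift).
FULL = struct.Struct("<BIii")   # kind, 4-byte id, lat, lon    -> 13 bytes
DELTA = struct.Struct("<BIhh")  # kind, 4-byte id, dlat, dlon  -> 9 bytes
KIND_FULL, KIND_DELTA = 0, 1
KEYFRAME_EVERY = 200            # full state every 200 writes per object
FLUSH_INTERVAL_S = 1.0          # emit one batched write per second
SCALE = 10_000_000              # degrees -> 1e-7 degree units
I16_MIN, I16_MAX = -(2**15), 2**15 - 1


class LocationWriter:
    def __init__(self, sink: BinaryIO, start: float = 0.0) -> None:
        self.sink = sink
        self.index: Dict[str, int] = {}              # string ID -> 4-byte int
        self.last: Dict[int, Tuple[int, int]] = {}   # last written position
        self.writes: Dict[int, int] = {}             # write count per object
        self.buffer = bytearray()
        self.last_flush = start

    def _short_id(self, object_id: str) -> int:
        # Assign sequential identifiers that fit in an unsigned 32-bit field.
        if object_id not in self.index:
            if len(self.index) >= 2**32:
                raise OverflowError("4-byte identifier space exhausted")
            self.index[object_id] = len(self.index)
        return self.index[object_id]

    def append(self, object_id: str, lat: float, lon: float, now: float) -> None:
        oid = self._short_id(object_id)
        pos = (round(lat * SCALE), round(lon * SCALE))
        count = self.writes.get(oid, 0)
        prev = self.last.get(oid)
        record = FULL.pack(KIND_FULL, oid, *pos)
        if prev is not None and count % KEYFRAME_EVERY != 0:
            dlat, dlon = pos[0] - prev[0], pos[1] - prev[1]
            # Use a delta only if it fits in two signed 16-bit fields;
            # otherwise keep the full record built above.
            if I16_MIN <= dlat <= I16_MAX and I16_MIN <= dlon <= I16_MAX:
                record = DELTA.pack(KIND_DELTA, oid, dlat, dlon)
        self.buffer += record
        self.last[oid] = pos
        self.writes[oid] = count + 1
        self.flush_if_due(now)

    def flush_if_due(self, now: float) -> None:
        # Batching: one sink write per interval instead of one per update.
        if self.buffer and now - self.last_flush >= FLUSH_INTERVAL_S:
            self.flush(now)

    def flush(self, now: float) -> None:
        # Call this explicitly on shutdown to persist the final partial batch.
        self.sink.write(bytes(self.buffer))
        self.buffer.clear()
        self.last_flush = now


def replay(data: bytes) -> Dict[int, Tuple[float, float]]:
    """Rebuild each object's latest position (in degrees) from the stream."""
    state: Dict[int, Tuple[int, int]] = {}
    offset = 0
    while offset < len(data):
        if data[offset] == KIND_FULL:
            _, oid, lat, lon = FULL.unpack_from(data, offset)
            state[oid] = (lat, lon)
            offset += FULL.size
        else:
            _, oid, dlat, dlon = DELTA.unpack_from(data, offset)
            lat, lon = state[oid]
            state[oid] = (lat + dlat, lon + dlon)
            offset += DELTA.size
    return {oid: (lat / SCALE, lon / SCALE) for oid, (lat, lon) in state.items()}


# Usage: three vehicles, one update each per second, for 450 seconds.
sink = io.BytesIO()
writer = LocationWriter(sink)
for t in range(450):
    for n, vehicle in enumerate(["truck-1", "truck-2", "truck-3"]):
        lat = 52.52 + n * 0.01 + t * 1e-5
        writer.append(vehicle, lat, 13.405 + t * 1e-5, now=float(t))
writer.flush(now=450.0)
print(len(sink.getvalue()), "bytes;", replay(sink.getvalue()))
```

Storing coordinates as fixed-point integers is a deliberate choice for the sketch: replaying integer deltas reproduces the last written position exactly, whereas summing floating-point deltas would slowly drift. Persisting the ID mapping to its own file, as HiveKit does, is left out.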

The results? 98% savings on their cloud costs, plus faster queries and reduced overhead. It’s not just about cutting costs; they now have a system built exactly for their needs.

Closing Thoughts:

"As per the latest Citrix report, 42% of U.S. organizations are considering or have moved half of their cloud workloads back on-premises."

The future is going to be multi-cloud and hybrid cloud.

When you get a chance to work on on-prem infrastructure, don't pass it up.

Don't limit yourself to a single cloud: become an expert in one and build working skills in at least two more.

🔴 Get my DevOps & Kubernetes ebooks! (free for Premium Club and Personal Tier newsletter subscribers)

Looking to promote your company, product, service, or event to 57,000+ DevOps and Cloud Professionals? Let's work together.