How to Manage S3 Server Access Logs at Massive Scale

TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep-dive use case, remote job opportunities, and articles.

👋 👋 A big thank you to today's sponsor THE HUSTLE DAILY

IN TODAY'S EDITION

🧠 Use Case

How to Manage S3 Server Access Logs at Massive Scale

👀 Remote Jobs

0G Labs is hiring a DevOps Engineer

Remote Location: Worldwide

Reach Security is hiring a DevOps Engineer

Remote Location: Worldwide

📚️ Resources

🧠 USE CASE

How to Manage S3 Server Access Logs at Massive Scale

The most practical challenges cloud engineers face with S3 server access logging show up only at scale:

No clear answer to who accessed which object and when

Exploding S3 request costs with no service-level attribution

Access denied issues that are impossible to trace across roles

Security incidents where object-level access history is missing

Compliance reviews asking for evidence that does not exist

Raw access logs costing more to store than the data itself

At this point, logging is not the problem. Managing S3 server access logs at massive scale is. You cannot confidently debug access failures, investigate incidents, or safely delete old data.

Recently, I came across Yelp's engineering setup for managing S3 server access logs at massive scale, and it handles the problem effectively.

Before getting into that setup, here’s what a sample S3 server access log record looks like:

79a59df923b1a2b3 techops-examples-bucket [23/Dec/2025:04:32:18 +0000] 54.240.196.186 - A1B2C3D4E5 REST.GET.OBJECT logs/app.log "GET /logs/app.log HTTP/1.1" 200 - 1048576 1048576 32 31 "-" "Mozilla/5.0 (Linux x86_64)" abcdEFGHijklMNOPqrstUVWXyz0123456789= TLS_AES_128_GCM_SHA256 s3.ap-south-1.amazonaws.com TLSv1.3 - -

Bucket name: techops-examples-bucket

Object key: logs/app.log

Operation: REST.GET.OBJECT

Timestamp: 23 Dec 2025 04:32:18 UTC

Source IP: 54.240.196.186

Request ID: A1B2C3D4E5

HTTP status: 200 (success)

Bytes transferred: 1048576

This is the typical level of detail engineers actually read when working with S3 server access logs in real systems.
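To make those fields concrete, here is a minimal Python sketch that parses a record like the one above with a regular expression. This is the same idea a Glue table applies through input.regex, but it is not Yelp's code. `LOG_PATTERN` and `parse_access_log_line` are hypothetical names. The named groups follow the order AWS documents for the leading fields of the format. Because the sample above is abbreviated, everything after the user agent is kept as a single `rest` string rather than split further.

```python
import re
from datetime import datetime

# Leading fields of an S3 server access log record, in the documented order.
# Everything after the user agent (host ID, TLS details, and so on) lands in "rest".
LOG_PATTERN = re.compile(
    r'(?P<bucket_owner>\S+) (?P<bucket>\S+) \[(?P<time>[^\]]+)\] '
    r'(?P<remote_ip>\S+) (?P<requester>\S+) (?P<request_id>\S+) '
    r'(?P<operation>\S+) (?P<key>\S+) "(?P<request_uri>[^"]*)" '
    r'(?P<http_status>\S+) (?P<error_code>\S+) (?P<bytes_sent>\S+) '
    r'(?P<object_size>\S+) (?P<total_time>\S+) (?P<turn_around_time>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"(?: (?P<rest>.*))?$'
)

# The sample record shown above, as a single line.
SAMPLE_LINE = (
    '79a59df923b1a2b3 techops-examples-bucket [23/Dec/2025:04:32:18 +0000] '
    '54.240.196.186 - A1B2C3D4E5 REST.GET.OBJECT logs/app.log '
    '"GET /logs/app.log HTTP/1.1" 200 - 1048576 1048576 32 31 "-" '
    '"Mozilla/5.0 (Linux x86_64)" abcdEFGHijklMNOPqrstUVWXyz0123456789= '
    'TLS_AES_128_GCM_SHA256 s3.ap-south-1.amazonaws.com TLSv1.3 - -'
)


def parse_access_log_line(line: str) -> dict:
    """Parse one access log record into a dict of named fields."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognized log line: {line[:80]!r}")
    record = match.groupdict()
    # "23/Dec/2025:04:32:18 +0000" -> timezone-aware datetime
    record["time"] = datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z")
    record["http_status"] = int(record["http_status"])
    # S3 writes "-" when no bytes were sent
    record["bytes_sent"] = 0 if record["bytes_sent"] == "-" else int(record["bytes_sent"])
    return record


if __name__ == "__main__":
    rec = parse_access_log_line(SAMPLE_LINE)
    print(rec["bucket"], rec["key"], rec["operation"], rec["http_status"], rec["bytes_sent"])
```

At small volumes, a parser like this is enough for ad hoc debugging. The architecture below exists because this per-line approach stops being practical once logs span many accounts, regions, and buckets.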

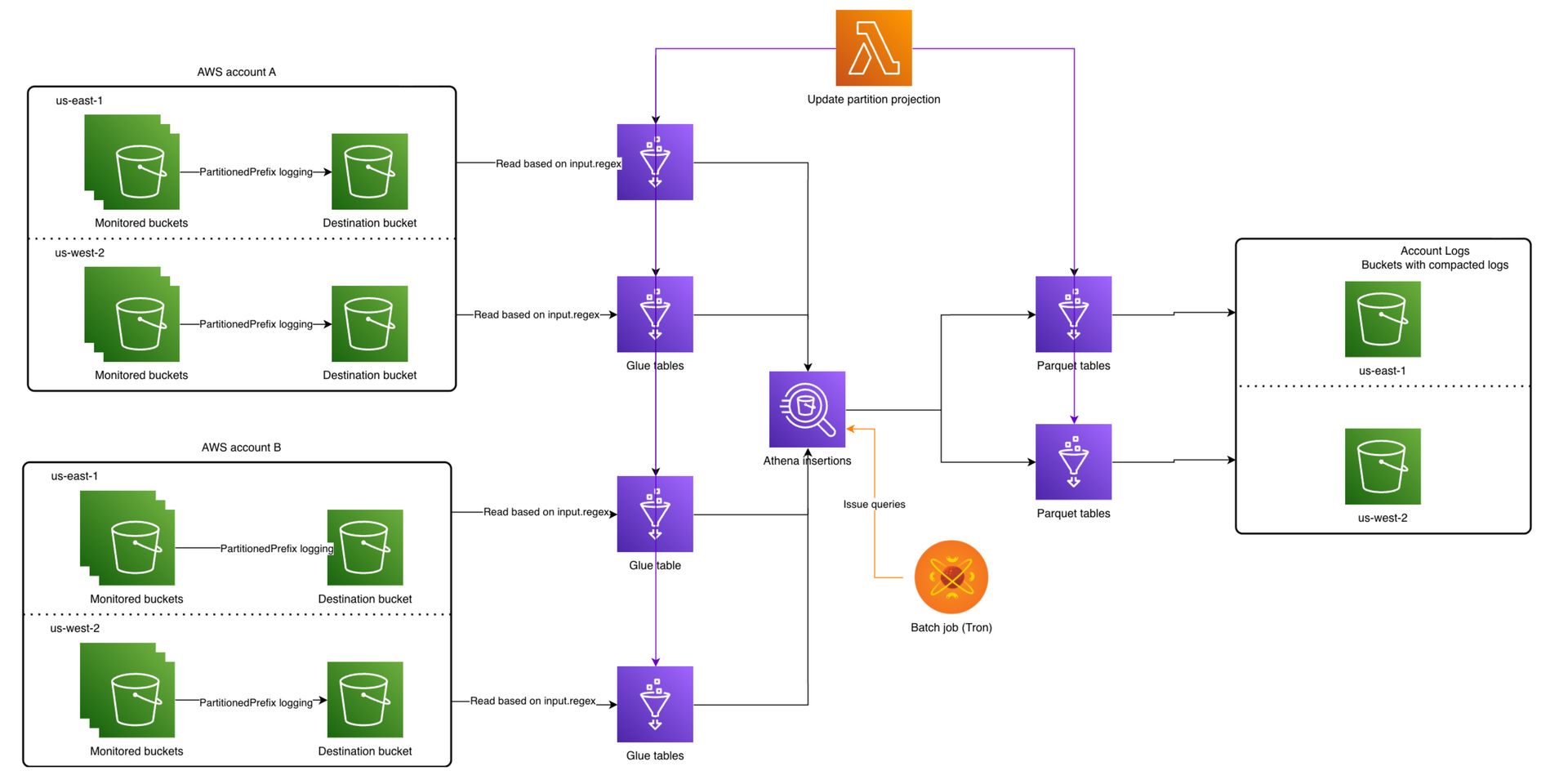

Architecture

At scale, S3 server access logs are treated as a data pipeline, not as files to query directly.

Ref: Yelp

Multiple AWS accounts and regions write S3 server access logs to destination buckets using the PartitionedPrefix log key format

Raw logs stay in the same region to avoid cross-region data transfer charges

Glue tables sit on top of the raw logs and parse them with a regex SerDe configured through input.regex

Raw logs are not queried directly for analytics or investigations

Athena is used only for INSERT operations that compact the logs

Compacted data is written as Parquet tables into centralized, account-level log buckets

Parquet tables become the single source of truth for querying

This design keeps raw logs cheap and short-lived while queries stay fast and predictable.
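Here is a minimal sketch of the logging side in Python. `logging_config` builds a `BucketLoggingStatus` payload in the shape boto3's `put_bucket_logging` accepts, with `PartitionedPrefix` enabled. `partition_prefix` shows where one day of raw logs would land, assuming the key layout AWS documents for that format (`[DestinationPrefix][SourceAccountId]/[SourceRegion]/[SourceBucket]/[YYYY]/[MM]/[DD]/...`). The naming scheme in `destination_bucket` is a hypothetical convention. These helpers only build dictionaries and strings, so no AWS call is made.

```python
from datetime import date


def destination_bucket(account_id: str, region: str) -> str:
    # Hypothetical naming convention: one raw-log bucket per account and region,
    # so raw logs stay in the region where they were generated.
    return f"s3-access-logs-{account_id}-{region}"


def logging_config(target_bucket: str, target_prefix: str = "raw/") -> dict:
    # Payload for BucketLoggingStatus in boto3's s3.put_bucket_logging.
    # PartitionedPrefix makes S3 add account/region/bucket/date segments to each log key.
    return {
        "LoggingEnabled": {
            "TargetBucket": target_bucket,
            "TargetPrefix": target_prefix,
            "TargetObjectKeyFormat": {
                "PartitionedPrefix": {"PartitionDateSource": "EventTime"}
            },
        }
    }


def partition_prefix(target_prefix: str, account_id: str, region: str,
                     source_bucket: str, day: date) -> str:
    # Prefix holding one day of logs under the assumed PartitionedPrefix key layout.
    return f"{target_prefix}{account_id}/{region}/{source_bucket}/{day:%Y/%m/%d}/"


if __name__ == "__main__":
    bucket = destination_bucket("123456789012", "ap-south-1")
    print(logging_config(bucket))
    print(partition_prefix("raw/", "123456789012", "ap-south-1",
                           "techops-examples-bucket", date(2025, 12, 23)))
```

With credentials in place, the payload would be applied per source bucket with something like `boto3.client("s3", region_name=region).put_bucket_logging(Bucket=source_bucket, BucketLoggingStatus=config)`. A predictable per-day prefix lets a Glue table or a discovery query target one day of raw logs instead of the whole bucket.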

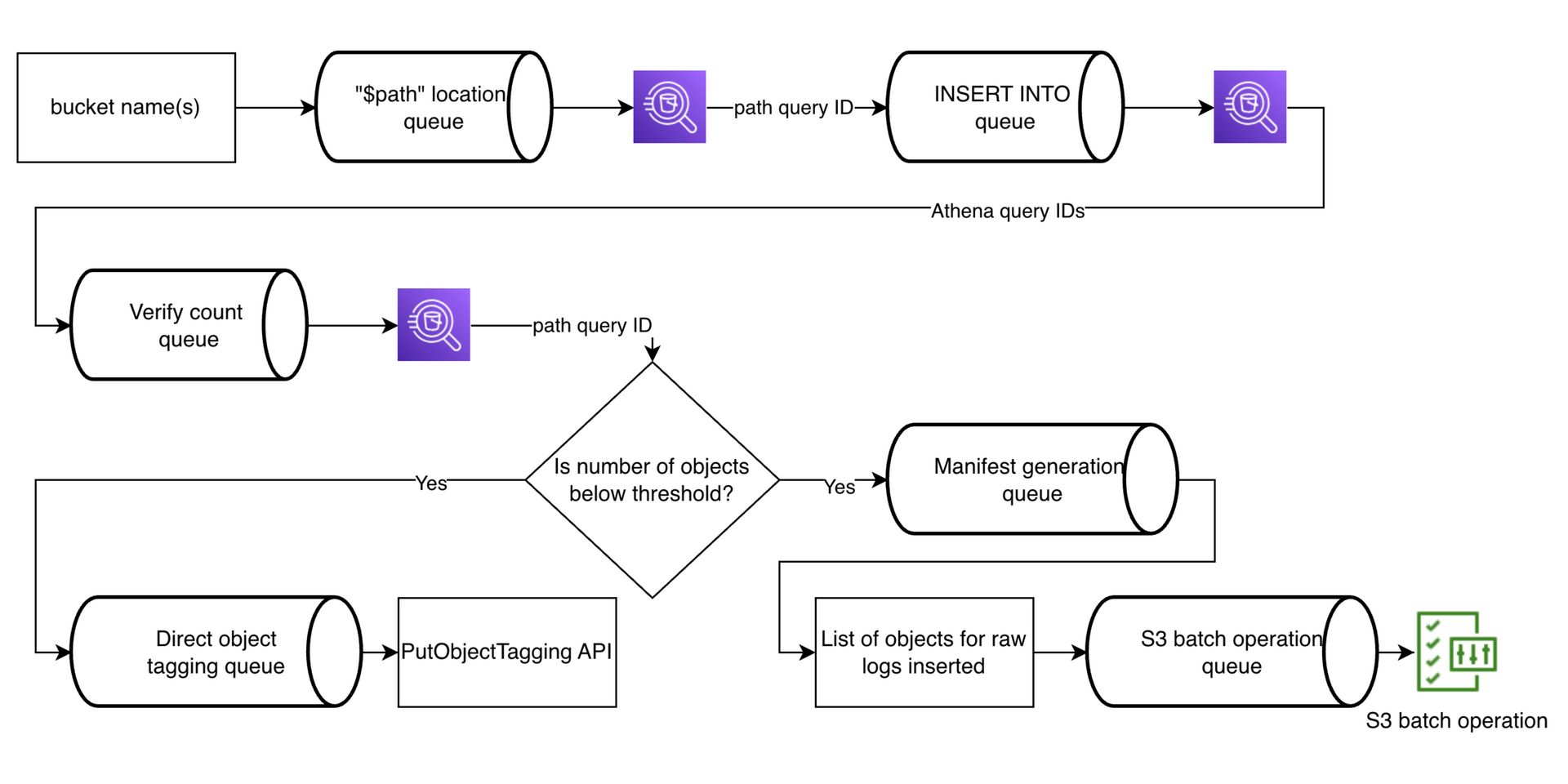

How queue processing fits into this architecture

Because Athena and S3 are shared and failure-prone at scale, compaction cannot be synchronous or manual.

Ref: Yelp

All Athena queries are issued through queues, not directly

Queries on Athena's $path column first discover raw log object locations

INSERT queries compact logs into Parquet and are retry-safe

Query IDs are tracked to correlate discovery, insertion, and verification

Verification happens before any cleanup is triggered

Raw log cleanup is decoupled from compaction

Low-volume logs are tagged directly

High-volume logs are tagged using S3 Batch Operations

Lifecycle policies then delete the tagged objects automatically

Together, the architecture and queue-driven execution make S3 server access logging operationally safe, cost-efficient, and scalable as bucket count and request volume grow.
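The cleanup half of that design can be sketched the same way, again in Python with hypothetical names and values:

- `tagging_plan` routes each prefix of verified raw logs to direct tagging or to an S3 Batch Operations job, based on an assumed object-count threshold.
- `put_object_tagging_kwargs` builds the arguments for boto3's `put_object_tagging` on the low-volume path.
- `lifecycle_rule` builds a tag-filtered expiration rule in the shape `put_bucket_lifecycle_configuration` accepts.

The Batch Operations job definition itself is not shown. The tag key, threshold, and expiration period are illustrative only.

```python
def tagging_plan(object_counts: dict, batch_threshold: int = 10_000) -> dict:
    """Split verified prefixes into direct tagging vs. S3 Batch Operations.

    object_counts maps a raw-log prefix to how many objects it holds;
    batch_threshold is a hypothetical cut-off, not a documented limit.
    """
    plan = {"direct": [], "batch_operations": []}
    for prefix, count in sorted(object_counts.items()):
        route = "batch_operations" if count >= batch_threshold else "direct"
        plan[route].append(prefix)
    return plan


def put_object_tagging_kwargs(bucket: str, key: str,
                              tag_key: str = "compacted", tag_value: str = "true") -> dict:
    # Arguments for boto3's s3.put_object_tagging(**kwargs).
    # Note: this call replaces the object's entire existing tag set.
    return {
        "Bucket": bucket,
        "Key": key,
        "Tagging": {"TagSet": [{"Key": tag_key, "Value": tag_value}]},
    }


def lifecycle_rule(tag_key: str = "compacted", tag_value: str = "true", days: int = 1) -> dict:
    # LifecycleConfiguration for boto3's s3.put_bucket_lifecycle_configuration
    # (which replaces the bucket's whole lifecycle configuration).
    # Only objects carrying the tag expire; untagged raw logs are left alone.
    return {
        "Rules": [
            {
                "ID": "expire-compacted-raw-access-logs",
                "Filter": {"Tag": {"Key": tag_key, "Value": tag_value}},
                "Status": "Enabled",
                "Expiration": {"Days": days},
            }
        ]
    }


if __name__ == "__main__":
    print(tagging_plan({"raw/2025/12/22/": 800, "raw/2025/12/23/": 250_000}))
    print(put_object_tagging_kwargs("s3-access-logs-123456789012-ap-south-1",
                                    "raw/2025/12/23/example.log"))
    print(lifecycle_rule())
```

Lifecycle expiration counts days from each object's creation date, not from when the tag was added. Raw logs that are already older than `days` when tagged should therefore become eligible at the next lifecycle run. S3 applies lifecycle actions asynchronously, so deletion is not instant.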
