TechOps Examples

Hey, it's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities, and articles.

👋 Before we begin... a big thank you to today's sponsor HUBSPOT

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.

👀 Remote Jobs

Specular is hiring a DevOps Engineer

Remote Location: Worldwide

Panoptyc is hiring a Cloud Systems Specialist (AWS)

Remote Location: Worldwide

📚 Resources

Looking to promote your company, product, service, or event to 47,000+ Cloud Native Professionals? Let's work together. Advertise With Us

🧠 DEEP DIVE USE CASE

Kubernetes Traffic Control Patterns

One of the first things you learn in Kubernetes is that Pods don’t last forever. They can get rescheduled, restarted, or replaced without warning. So when you want to expose an application running inside the cluster, you don’t point traffic directly to a Pod. You go through a Service.

A Kubernetes Service provides a stable network identity: it selects Pods by label and exposes them through a consistent endpoint. Service types follow a layered access model, with each type building on the one before it:

ClusterIP: Exposes the Service on an internal IP reachable only from inside the cluster. This is the default type and is used for Pod-to-Pod communication.

NodePort: Exposes the Service on the same static port on every cluster node, allowing external access via any node’s IP and that port. A NodePort Service still gets a ClusterIP.

LoadBalancer: Provisions an external load balancer, typically through the cloud provider, that forwards traffic to the Service's node ports and gives clients a single external IP.
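To make the layering concrete, here is a minimal Python sketch that builds Service manifests as plain dicts for each type and mimics how an equality-based label selector picks Pods. The Service names, labels (`app: web`), ports, and the `30080` node port are hypothetical; the manifest fields (`type`, `selector`, `ports`, `nodePort`) follow the core v1 Service API, and `kubectl apply -f` accepts JSON manifests.

```python
import json


def service_manifest(name, selector, port, target_port,
                     svc_type="ClusterIP", node_port=None):
    """Build a v1 Service manifest as a dict (hypothetical helper).

    svc_type is one of "ClusterIP", "NodePort", "LoadBalancer".
    If node_port is None for NodePort/LoadBalancer, Kubernetes picks one.
    """
    if svc_type not in ("ClusterIP", "NodePort", "LoadBalancer"):
        raise ValueError(f"unsupported Service type: {svc_type}")
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        if svc_type == "ClusterIP":
            # A plain ClusterIP Service has no node port to pin.
            raise ValueError("nodePort requires type NodePort or LoadBalancer")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": svc_type, "selector": selector, "ports": [port_spec]},
    }


def select_pods(selector, pods):
    """Equality-based matching: a Pod is selected only if every selector
    key/value pair appears in its labels (extra Pod labels are ignored)."""
    return [pod["name"] for pod in pods
            if all(pod["labels"].get(k) == v for k, v in selector.items())]


# Hypothetical Pods and selector for illustration.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db"}},
]
selector = {"app": "web"}

for svc_type, node_port in [("ClusterIP", None), ("NodePort", 30080),
                            ("LoadBalancer", None)]:
    manifest = service_manifest(f"web-{svc_type.lower()}", selector,
                                80, 8080, svc_type, node_port)
    print(json.dumps(manifest, indent=2))

print(select_pods(selector, pods))  # ['web-1', 'web-2']
```

The three manifests differ only in `spec.type` and the optional `nodePort`, which reflects the layering: Kubernetes still assigns a ClusterIP to NodePort and LoadBalancer Services.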

Here are four traffic control patterns you’ll often see:

Access With NodePort

Access With Load Balancer

Ingress Controller managing service routing

Load Balancer combined with Ingress Controller

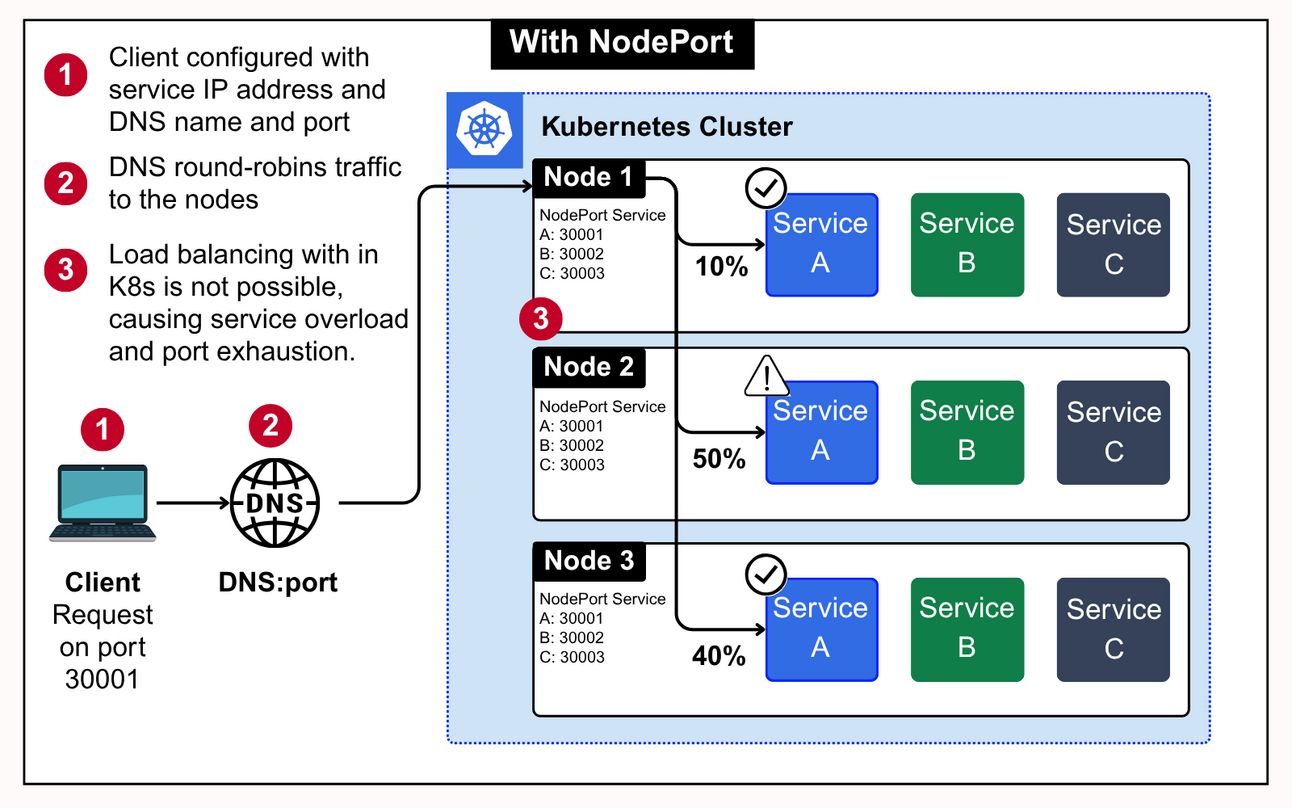

1. Access With NodePort

You’ll usually see this pattern in on-prem clusters or early-stage cloud setups where a full LoadBalancer integration isn’t available.

But here’s what you really need to know:

NodePort isn’t real load balancing. Spreading clients across node IPs with DNS round robin may look like load distribution, but DNS doesn’t account for node health or service-level metrics. If one node is overloaded or down, clients still get routed to it.
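The health problem is easy to see in a toy simulation. The Python sketch below assumes DNS hands out node IPs in strict rotation (real resolvers and client-side caching are messier), counts failed requests when one of three hypothetical nodes is down, and compares that with a balancer that health-checks nodes first.

```python
from itertools import cycle


def failed_requests(nodes, healthy, requests, health_aware=False):
    """Count requests that land on a down node under round-robin rotation.

    health_aware=False models plain DNS round robin: every node stays in
    the rotation. health_aware=True models a load balancer that removes
    nodes failing a health check before rotating.
    """
    pool = [n for n in nodes if n in healthy] if health_aware else list(nodes)
    if not pool:
        return requests  # nothing healthy to send traffic to
    rotation = cycle(pool)
    failures = 0
    for _ in range(requests):
        if next(rotation) not in healthy:
            failures += 1
    return failures


nodes = ["node-a", "node-b", "node-c"]   # hypothetical node names
healthy = {"node-a", "node-b"}           # node-c is down

print(failed_requests(nodes, healthy, 300))                     # 100
print(failed_requests(nodes, healthy, 300, health_aware=True))  # 0
```

One request in three fails for as long as the dead node stays in the DNS answer, which is the gap an external load balancer with health checks closes.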

Clients need to know node IPs and ports. This breaks abstraction. If your client or API gateway is outside Kubernetes, you’re hardcoding infrastructure details into configs. Bad for portability.

Overloaded nodes aren’t just inefficient; they cause failures. Because Kubernetes doesn’t rebalance incoming client traffic across nodes, one node taking the hit can cause failed connections even when Pods on other nodes are healthy.

Port range limits matter. The default NodePort range (30000–32767, inclusive) gives you only 2,768 ports. In clusters with many Services or aggressive blue-green deployments, it’s easy to run out.
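The arithmetic behind that limit, as a small Python sketch. The range mirrors the kube-apiserver default for `--service-node-port-range` (30000-32767, both ends inclusive); the sizing model (ports per Service, blue and green copies running side by side) and the sequential allocator are hypothetical simplifications, not how the API server actually assigns ports.

```python
# Default NodePort range: 30000-32767 inclusive, so the stop value is 32768.
NODEPORT_RANGE = range(30000, 32768)


def services_that_fit(ports_per_service, live_copies=1, port_range=NODEPORT_RANGE):
    """How many Services fit if each exposes `ports_per_service` node ports
    and deployments keep `live_copies` versions (e.g. blue + green) alive."""
    return len(port_range) // (ports_per_service * live_copies)


class NodePortAllocator:
    """Toy sequential allocator; the real API server uses its own strategy."""

    def __init__(self, port_range=NODEPORT_RANGE):
        self._free = list(port_range)

    def allocate(self):
        if not self._free:
            raise RuntimeError("NodePort range exhausted")
        return self._free.pop(0)


print(len(NODEPORT_RANGE))       # 2768
print(services_that_fit(2, 2))   # 692: 2 ports each, blue and green live

allocator = NodePortAllocator()
for _ in range(len(NODEPORT_RANGE)):
    allocator.allocate()
try:
    allocator.allocate()
except RuntimeError as err:
    print(err)                   # NodePort range exhausted
```

With two exposed ports per Service and blue-green doubling every deployment, the ceiling drops from 2,768 to 692 Services.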

No SSL/TLS termination, DNS-based routing, or URL mapping. You’ll have to bolt on those capabilities manually or rely on external proxies, which adds complexity.
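URL mapping is the piece an Ingress controller normally adds. As a rough illustration of what you would otherwise bolt on yourself, here is a minimal Python sketch of path-based routing with longest-prefix matching on `/`-separated path segments, similar in spirit to Ingress `pathType: Prefix`. The paths and backend Service names are hypothetical, and host-based routing and TLS are left out.

```python
def path_segments(path):
    """Split a URL path into non-empty segments: '/api/v1/' -> ['api', 'v1']."""
    return [seg for seg in path.split("/") if seg]


def route(rules, request_path):
    """Return the backend of the longest rule whose segments prefix the
    request path, or None if no rule matches.

    Matching is per segment, so '/api' matches '/api/users' but not '/apiv2'.
    """
    request = path_segments(request_path)
    best_len, best_backend = -1, None
    for rule_path, backend in rules.items():
        rule = path_segments(rule_path)
        if request[:len(rule)] == rule and len(rule) > best_len:
            best_len, best_backend = len(rule), backend
    return best_backend


# Hypothetical routing table: path prefix -> backend Service name.
rules = {
    "/": "frontend-svc",
    "/api": "api-svc",
    "/api/admin": "admin-svc",
}

for path in ["/", "/api/users", "/api/admin/audit", "/apiv2"]:
    print(path, "->", route(rules, path))
```

Because `apiv2` is a different segment from `api`, the request for `/apiv2` falls through to the catch-all `/` rule rather than reaching `api-svc`.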

I'm offering 50% off all annual membership plans for a limited time.

A membership unlocks these deep-dive editions on Thursdays and Saturdays.

Upgrade to Paid to read the rest.

Become a paying subscriber to get access to this post and other subscriber-only content.

Paid subscriptions get you:

- Access to an archive of 175+ use cases

- Deep Dive use case editions (Thursdays and Saturdays)

- Access to Private Discord Community

- Invitations to monthly Zoom calls for use case discussions and industry leader meetups

- Quarterly 1:1 'Ask Me Anything' power session