TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

🧠 DEEP DIVE USE CASE

Kubernetes etcd Crash Course for DevOps Engineers

In Kubernetes, etcd is a distributed, consistent key-value store that holds the entire state of the cluster. Every Kubernetes component relies on etcd, through the API server, to know what should be running and what is actually running.

What etcd Stores

Pod specifications, Deployments, Services, ConfigMaps, Secrets, and other Kubernetes resources.

Status information such as pod conditions, node health, and the running state of workloads.

Policies and cluster-wide metadata including RBAC roles, quotas, and namespaces.
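To make the storage layout a little more concrete, here is a minimal sketch of how keys for some namespaced core resources are typically laid out. It assumes the API server runs with its default `--etcd-prefix` of `/registry`; the helper name `registry_key` and the sample resource names are hypothetical, and some resource types use a different path shape than the one shown.

```python
# Sketch only: builds the etcd key that the API server typically uses for a
# namespaced core resource. Not every resource type follows this exact shape.
ETCD_PREFIX = "/registry"  # assumption: default value of kube-apiserver --etcd-prefix


def registry_key(resource: str, namespace: str, name: str) -> str:
    """Return the conventional etcd key for a namespaced core resource."""
    return f"{ETCD_PREFIX}/{resource}/{namespace}/{name}"


if __name__ == "__main__":
    # Hypothetical object names, used purely for illustration.
    samples = [("pods", "web-0"), ("configmaps", "app-config"), ("secrets", "db-creds")]
    for resource, name in samples:
        print(registry_key(resource, "default", name))
```

The values stored under these keys are serialized API objects, so in practice you read them through the API server rather than from etcd directly.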

How etcd Works

etcd uses a consensus algorithm called Raft to keep data consistent across its distributed nodes.

In an etcd cluster, one node is elected as the leader, while the others are followers. The leader handles all write requests and propagates these changes to the followers to ensure data consistency across the cluster.

If the leader fails, the remaining nodes elect a new leader, provided a majority (quorum) of members is still reachable. Writes pause briefly during the election, then cluster operations continue.
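Raft needs a majority of members to agree before a write is committed or a leader is elected, which is why etcd clusters are usually sized with an odd number of nodes. The arithmetic can be sketched as follows; the function names are my own and not part of any etcd tooling.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for size in (1, 3, 4, 5):
        print(f"members={size} quorum={quorum(size)} tolerated_failures={fault_tolerance(size)}")
```

Note that going from 3 to 4 members raises the quorum without tolerating any extra failure, so the fourth node adds cost but no resilience.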

Role in Pod Lifecycle

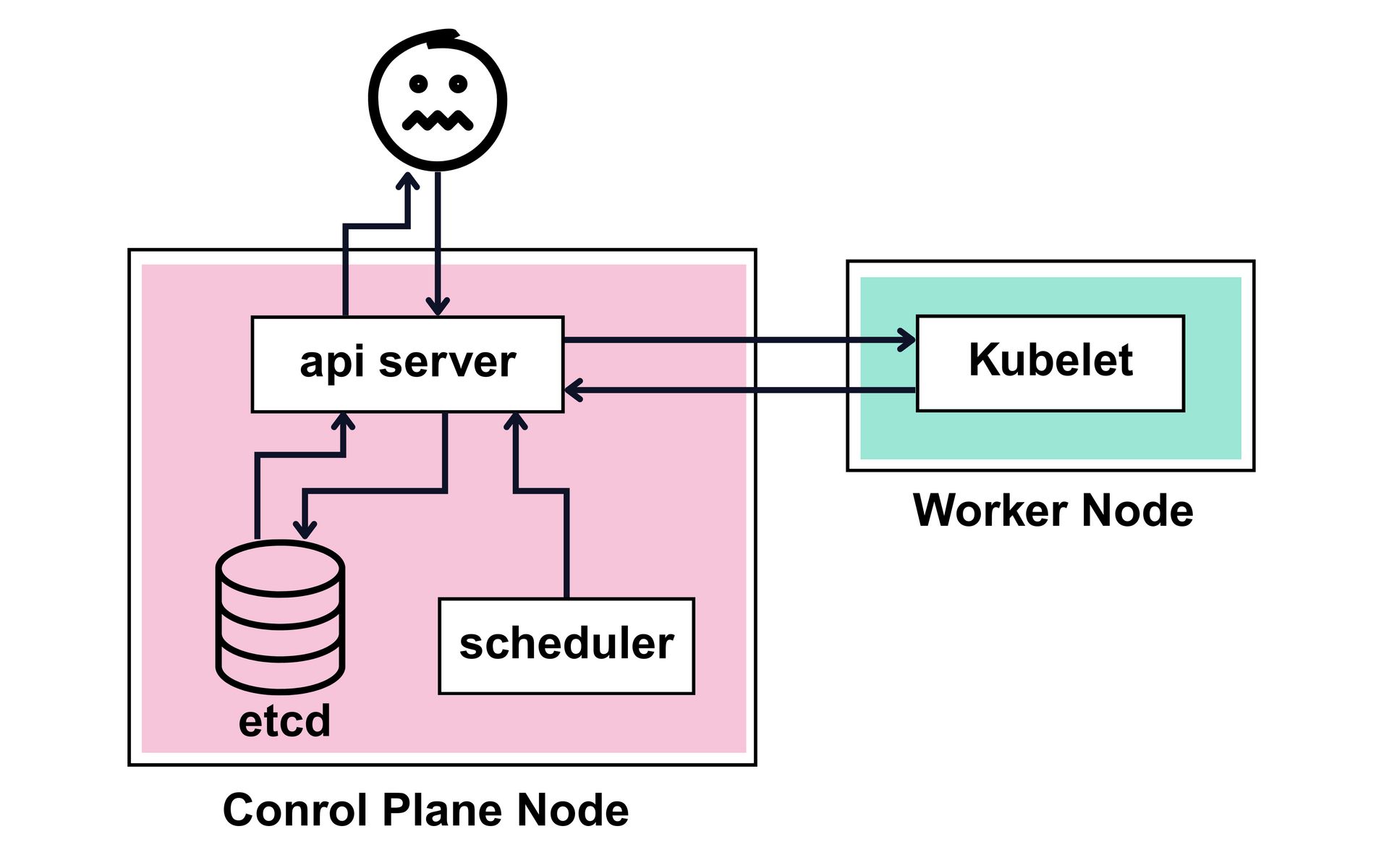

When you create a Pod using kubectl, the API server validates the request and writes the Pod specification into etcd. The scheduler then picks a node and records that decision through the API server, which persists it in etcd.

The kubelet on the chosen node sees the Pod assigned to it, starts the containers, and reports status back through the API server, which again updates etcd.

This loop of desired state and observed state is always reconciled against the data in etcd.

Every change in the cluster is written to etcd through the API server. Controllers and kubelets do not talk to each other directly.

They interact only with the API server.
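The flow above can be modelled with a toy, in-memory sketch. Everything here is a simplification for illustration: `ToyApiServer`, `scheduler_pass`, and `kubelet_pass` are hypothetical names, the dictionary stands in for etcd, and real components watch for changes rather than being called in sequence.

```python
from dataclasses import dataclass, field


@dataclass
class ToyApiServer:
    """Stand-in for the API server; `store` plays the role of etcd."""
    store: dict = field(default_factory=dict)

    def create_pod(self, name: str, spec: dict) -> None:
        if not name:  # minimal stand-in for request validation
            raise ValueError("pod name is required")
        self.store[name] = {"spec": dict(spec), "nodeName": None, "phase": "Pending"}

    def bind(self, name: str, node: str) -> None:
        self.store[name]["nodeName"] = node

    def update_status(self, name: str, phase: str) -> None:
        self.store[name]["phase"] = phase

    def unscheduled(self) -> list:
        return [n for n, pod in self.store.items() if pod["nodeName"] is None]

    def pods_for_node(self, node: str) -> list:
        return [n for n, pod in self.store.items() if pod["nodeName"] == node]


def scheduler_pass(api: ToyApiServer, nodes: list) -> None:
    """Assign each unscheduled pod to a node, round-robin, via the API server."""
    for index, name in enumerate(api.unscheduled()):
        api.bind(name, nodes[index % len(nodes)])


def kubelet_pass(api: ToyApiServer, node: str) -> None:
    """Report every pod assigned to this node as Running, via the API server."""
    for name in api.pods_for_node(node):
        api.update_status(name, "Running")


if __name__ == "__main__":
    api = ToyApiServer()
    api.create_pod("web", {"image": "nginx"})
    scheduler_pass(api, ["node-a"])
    kubelet_pass(api, "node-a")
    print(api.store["web"])
```

The point of the sketch is that the scheduler and the kubelet never call each other: both only read and write through the same API object, which owns the store.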

Let’s talk about etcd locks, a tool that can prevent disasters but, if misused, can also cause bottlenecks.

Why Use etcd Locking?

Imagine two processes (let’s say two controllers) trying to update the same resource in etcd at the same time.

Race conditions can lead to inconsistent state: one process overwrites another’s update, leaving your cluster in a half-applied state.

Locking prevents this. It ensures only one process at a time gets to modify a key, avoiding conflicts and data corruption.
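As a rough illustration of the idea, a lock can be modelled as a key that only one owner manages to create. The sketch below uses an in-memory store, not a real etcd client; `ToyKV`, `try_lock`, `unlock`, and the key and owner names are all hypothetical.

```python
class ToyKV:
    """In-memory stand-in for a key-value store with atomic create-if-absent."""

    def __init__(self) -> None:
        self._data = {}

    def put_if_absent(self, key: str, value: str) -> bool:
        """Create the key only if it does not exist yet; report success."""
        if key in self._data:
            return False
        self._data[key] = value
        return True

    def delete_if_owner(self, key: str, owner: str) -> bool:
        """Delete the key only if it is currently held by `owner`."""
        if self._data.get(key) != owner:
            return False
        del self._data[key]
        return True


def try_lock(kv: ToyKV, lock_key: str, owner: str) -> bool:
    return kv.put_if_absent(lock_key, owner)


def unlock(kv: ToyKV, lock_key: str, owner: str) -> bool:
    return kv.delete_if_owner(lock_key, owner)


if __name__ == "__main__":
    kv = ToyKV()
    print(try_lock(kv, "/locks/resource-x", "controller-a"))  # first caller gets the lock
    print(try_lock(kv, "/locks/resource-x", "controller-b"))  # second caller is refused
    print(unlock(kv, "/locks/resource-x", "controller-a"))    # owner releases it
    print(try_lock(kv, "/locks/resource-x", "controller-b"))  # now the second caller succeeds
```

A real etcd lock is also tied to a lease, so the lock disappears if its holder crashes; this toy version leaves that out, which is exactly the kind of gap that turns a lock into a bottleneck.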

This sample illustrates an etcdctl command hanging indefinitely while trying to reach the etcd endpoints.

This usually happens when etcd is overloaded, locked by another process, or experiencing network failures.
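One way to keep a health check from blocking a script is to pass explicit timeouts to etcdctl and add a hard deadline around the process. The sketch below is an assumption about how you might wire that up: the endpoint address is a placeholder, the helper names are hypothetical, and TLS flags (which most clusters need) are left out.

```python
import subprocess


def build_health_command(endpoints, dial_timeout="2s", command_timeout="5s"):
    """Build an `etcdctl endpoint health` invocation with explicit timeouts."""
    return [
        "etcdctl",
        f"--endpoints={','.join(endpoints)}",
        f"--dial-timeout={dial_timeout}",
        f"--command-timeout={command_timeout}",
        "endpoint",
        "health",
    ]


def run_with_deadline(argv, deadline_seconds):
    """Run argv; return (returncode, stdout), or (None, "") if the deadline expires."""
    try:
        done = subprocess.run(argv, capture_output=True, text=True, timeout=deadline_seconds)
    except subprocess.TimeoutExpired:
        return None, ""
    return done.returncode, done.stdout


if __name__ == "__main__":
    # Placeholder endpoint; replace with your own and add certificate flags as needed.
    command = build_health_command(["https://127.0.0.1:2379"])
    print(" ".join(command))
    # To execute it (requires etcdctl on PATH):
    # code, output = run_with_deadline(command, deadline_seconds=10)
```

The outer deadline is a safety net: even if the CLI itself stalls, the calling script gets control back and can alert or retry.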

With this basic understanding, let us dive deeper into:

How to Use etcd Locking

When & Where to Use etcd Locking

etcd Deployment Types
