Kubernetes Anti Patterns and Solutions

TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities, and articles.

🧠 DEEP DIVE USE CASE

Kubernetes Anti Patterns and Solutions

Setting up a Kubernetes environment is far easier than running and optimizing it. In my 8+ years with Kubernetes, I have seen even mature companies fall into anti patterns. These are not beginner mistakes. Even the best practitioners repeat them, especially under scale, pressure, or team churn.

The top anti patterns I repeatedly see:

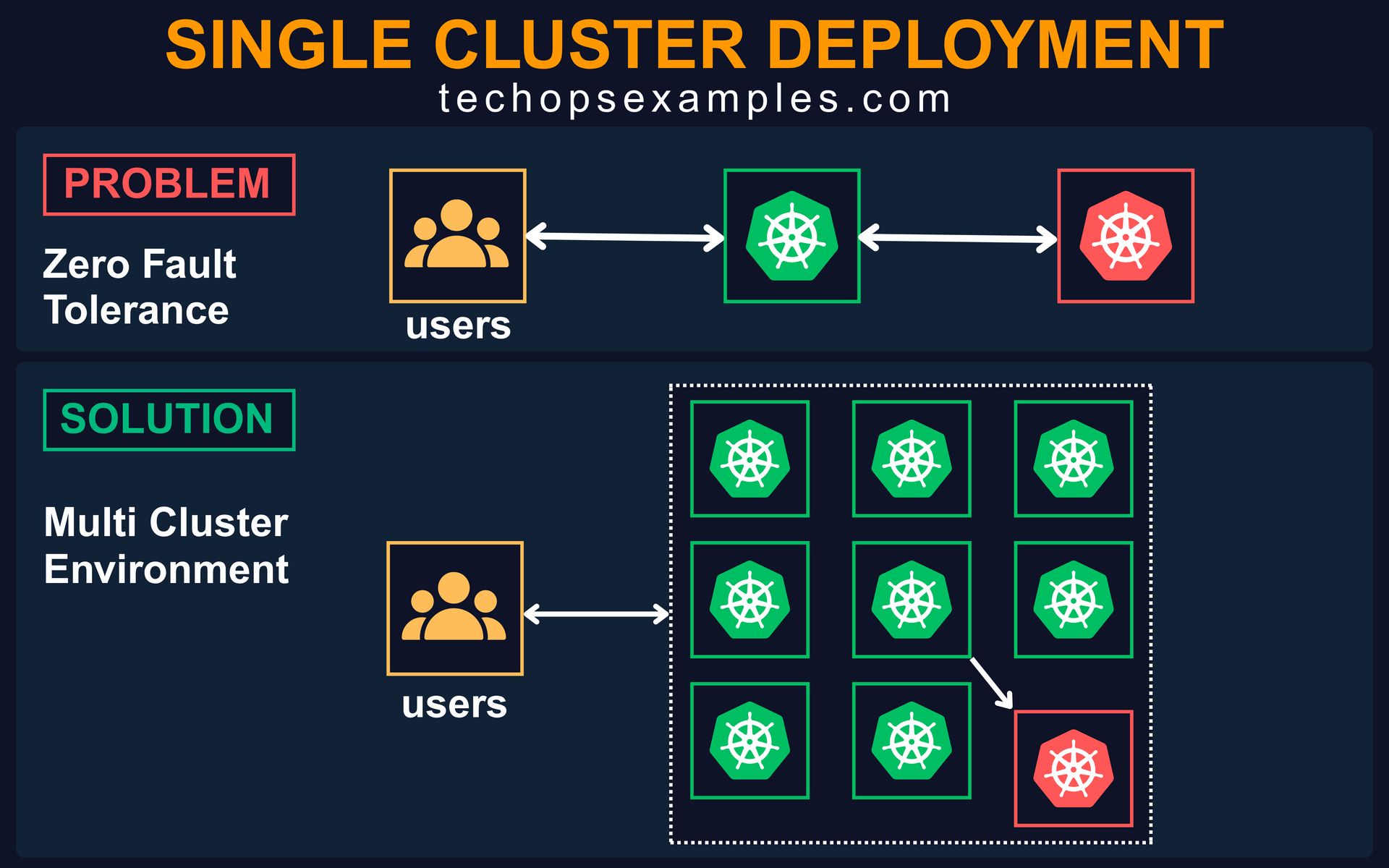

1. Single Cluster Deployment

Teams often start with a single production cluster and keep scaling everything into it (services, workloads, secrets, users, and integrations). It works fine until it doesn't.

In real world scenarios, I’ve seen how a single issue like a control plane outage, faulty node group update, or a bad deployment can take down the entire system. There is zero fault tolerance. There is no blast radius control.

Even large scale companies with strong DevOps teams end up routing all production traffic to a single cluster due to operational convenience. But convenience breaks under pressure.

What usually goes wrong:

- The cluster becomes unreachable during an upgrade or a KMS issue
- One tenant affects others in a shared multi tenant cluster
- A region specific outage takes down global access
- There is no way to isolate workloads or fail over in critical production moments

Adopt a Multi Cluster Architecture:

- Split production workloads across two or more regional clusters
- Use DNS level routing or Global Load Balancers (like AWS Route 53, GCLB, Akamai) to handle failover and traffic steering
- If the SLA is critical, design clusters per business unit or per customer tier
- Back up cluster state regularly using tools like Velero
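
The failover and traffic steering idea can be sketched as a toy model in Python. This is only an illustration of the decision a DNS or global load balancer layer makes, not how Route 53, GCLB, or Akamai are implemented. The cluster names, regions, and the `healthy` flag (assumed to come from an external health check) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str        # hypothetical cluster identifier
    region: str      # hypothetical region label
    healthy: bool    # assumed to be set by an external health check
    weight: int = 1  # relative share of traffic while healthy


def route_targets(clusters: list[Cluster]) -> dict[str, float]:
    """Return the fraction of traffic each healthy cluster should receive."""
    healthy = [c for c in clusters if c.healthy and c.weight > 0]
    if not healthy:
        raise RuntimeError("no healthy cluster available for failover")
    total = sum(c.weight for c in healthy)
    return {c.name: c.weight / total for c in healthy}


clusters = [
    Cluster("prod-us-east", "us-east-1", healthy=True),
    Cluster("prod-eu-west", "eu-west-1", healthy=True),
]
print(route_targets(clusters))  # {'prod-us-east': 0.5, 'prod-eu-west': 0.5}

# Simulate a regional outage: all traffic shifts to the remaining cluster.
clusters[0].healthy = False
print(route_targets(clusters))  # {'prod-eu-west': 1.0}
```

With a single cluster, the same outage leaves the function with nothing to return, which is the "no blast radius control" problem in miniature.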

Operational Tips:

- Start with two clusters if budget allows, and test the multi cluster setup from Day 1
- Set up observability and alerting per cluster (not only globally)
- Practice failover once every quarter
- Avoid hardcoding cluster names or URLs into CI/CD pipelines or secrets
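
As a minimal sketch of the last tip, a pipeline helper can take the target cluster from configuration instead of embedding it. The environment variable name `TARGET_KUBE_CONTEXT` and the helper itself are hypothetical; `--context` is the standard kubectl flag for selecting a kubeconfig context.

```python
import os
from typing import Mapping, Optional


def kubectl_command(args: list[str], env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Build a kubectl invocation whose target cluster comes from configuration."""
    env = os.environ if env is None else env
    context = env.get("TARGET_KUBE_CONTEXT")  # hypothetical variable name
    if not context:
        # Fail loudly rather than falling back to whatever context is current.
        raise ValueError("TARGET_KUBE_CONTEXT is not set; refusing to guess a cluster")
    return ["kubectl", "--context", context, *args]


# The same pipeline step can now target any cluster, including a failover one.
print(kubectl_command(["apply", "-f", "deploy.yaml"], env={"TARGET_KUBE_CONTEXT": "prod-eu-west"}))
```

Because the cluster is an input, a quarterly failover drill only changes a variable, not the pipeline definition.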

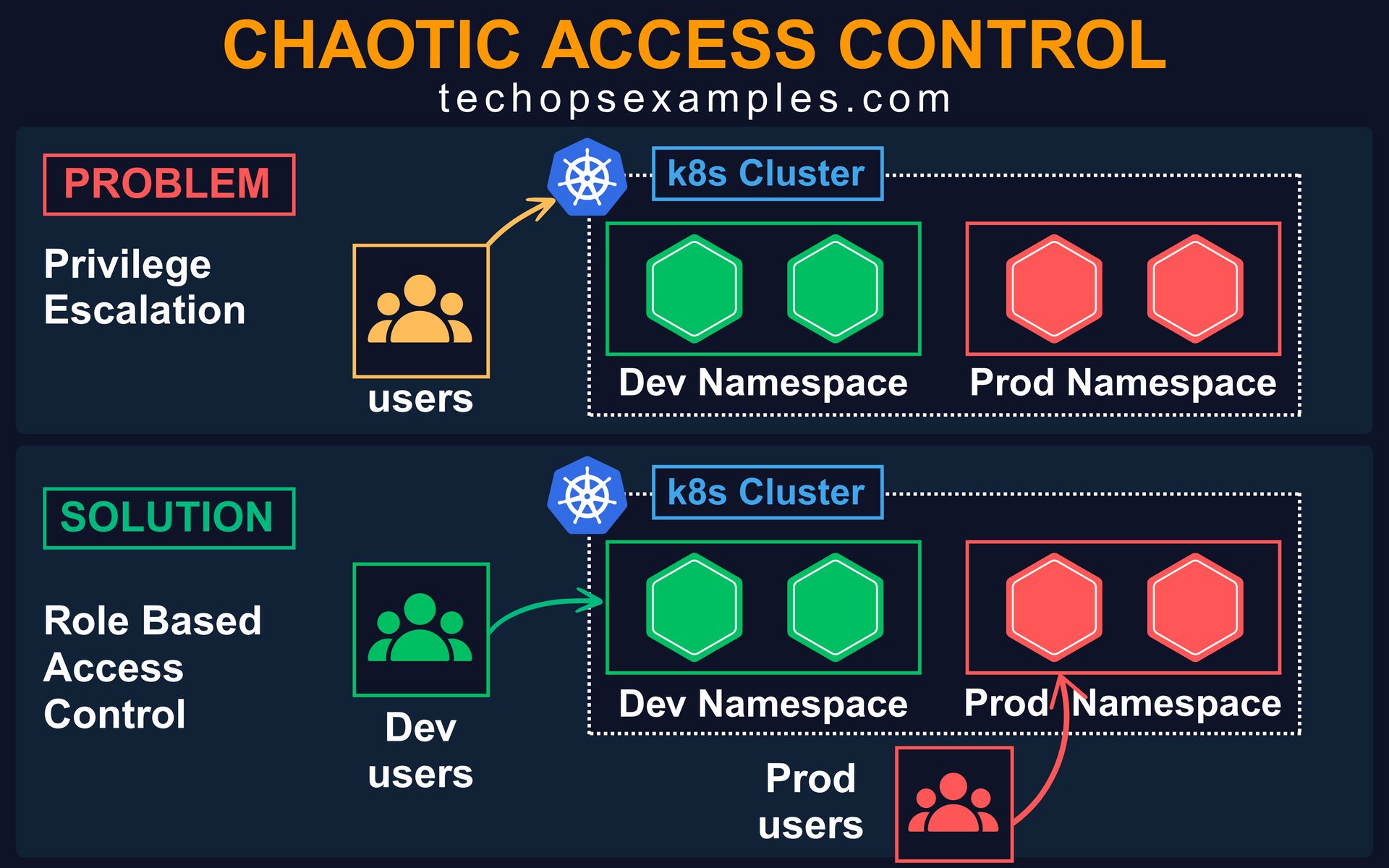

2. Chaotic Access Control

Giving broad cluster access for convenience is a dangerous habit. Developers end up accessing or deleting production resources by accident because RBAC is loosely configured.

This usually happens when teams share the same cluster for dev and prod, and use ClusterRoleBinding to quickly unblock access. Over time, there's no clear boundary between environments, and no visibility into who can do what.

Fix it with namespace scoped RBAC:

- Use Role and RoleBinding instead of cluster wide bindings
- Create separate access policies for dev, stage, and prod
- Group users and bind roles to those groups
- Only grant the minimum permissions needed
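
A namespace scoped setup along these lines could look like the following sketch, which generates a read-only Role and a RoleBinding for a group and prints them as JSON (kubectl accepts JSON manifests as well as YAML). The namespace `dev`, the group `dev-team`, and the choice of resources and verbs are assumptions for illustration, not a recommendation from the article.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def read_only_access(namespace: str, group: str) -> dict:
    """Build a Role and RoleBinding granting a group read-only access in one namespace."""
    role_name = f"{namespace}-read-only"  # hypothetical naming scheme
    role = {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            # Core API group ("") covers pods; "apps" covers deployments.
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]},
            {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "list", "watch"]},
        ],
    }
    binding = {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        # Bind to a group rather than to individual users.
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_API_GROUP},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [role, binding]}


print(json.dumps(read_only_access("dev", "dev-team"), indent=2))
```

Calling the function once per environment with different groups keeps dev, stage, and prod policies separate while sharing one template.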

Practical Hygiene:

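- Run `kubectl get rolebindings --all-namespaces` and `kubectl get clusterrolebindings` regularly to review who has access
- Use tools like rakkess or kubectl-who-can to inspect effective permissions
- Never use system:masters for human users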
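
A regular review can be partly automated. The sketch below takes the parsed output of `kubectl get clusterrolebindings -o json` and lists non built-in subjects bound to `cluster-admin`. The sample data, the binding names, and the rule of skipping subjects whose names start with `system:` are assumptions made for illustration.

```python
def broad_bindings(doc: dict, role: str = "cluster-admin") -> list[tuple[str, str, str]]:
    """Return (binding name, subject kind, subject name) for subjects bound to `role`."""
    findings = []
    for item in doc.get("items", []):
        if item.get("roleRef", {}).get("name") != role:
            continue
        # `subjects` may be absent or null on a binding, so default to an empty list.
        for subject in item.get("subjects") or []:
            name = subject.get("name", "")
            if name.startswith("system:"):
                continue  # skip built-in subjects such as system:masters
            findings.append((item["metadata"]["name"], subject.get("kind", ""), name))
    return findings


# Hypothetical sample shaped like `kubectl get clusterrolebindings -o json` output.
sample = {
    "items": [
        {
            "metadata": {"name": "cluster-admin"},
            "roleRef": {"kind": "ClusterRole", "name": "cluster-admin"},
            "subjects": [{"kind": "Group", "name": "system:masters"}],
        },
        {
            "metadata": {"name": "dev-unblock"},
            "roleRef": {"kind": "ClusterRole", "name": "cluster-admin"},
            "subjects": [{"kind": "User", "name": "alice@example.com"}],
        },
        {
            "metadata": {"name": "viewers"},
            "roleRef": {"kind": "ClusterRole", "name": "view"},
            "subjects": [{"kind": "Group", "name": "dev-team"}],
        },
    ]
}
print(broad_bindings(sample))  # [('dev-unblock', 'User', 'alice@example.com')]
```

To run it against a real cluster, the JSON from kubectl can be loaded with `json.load` and passed to `broad_bindings` in place of `sample`.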

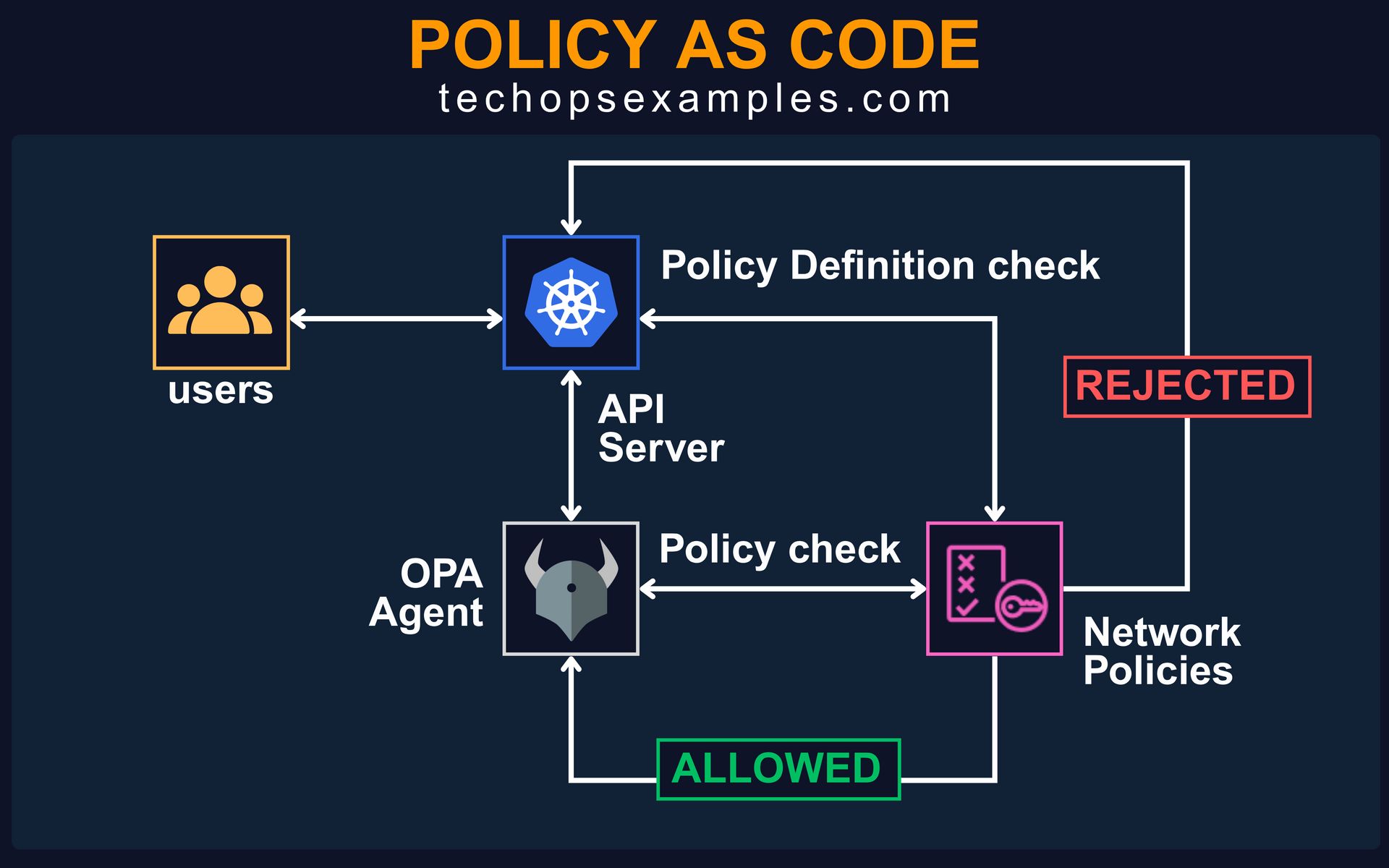

3. No Policy As Code

Manual reviews and verbal guidelines are not security. In many teams, enforcement of naming conventions, image registries, host paths, and privilege flags is done through Slack messages or code reviews. That does not scale. It also does not prevent mistakes.
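
To make the idea concrete, here is a minimal sketch of a rule set expressed as code instead of as a Slack message. It checks a Pod manifest for images from unapproved registries, privileged containers, and hostPath volumes. The registry prefix and the sample manifest are hypothetical, and a real setup would more likely enforce such rules at admission time with a policy engine (for example OPA Gatekeeper or Kyverno, which are my examples rather than tools named in this edition).

```python
ALLOWED_REGISTRIES = ("registry.example.com/",)  # hypothetical internal registry


def violations(pod: dict) -> list[str]:
    """Return human-readable policy violations for a Pod manifest."""
    found = []
    spec = pod.get("spec", {})
    containers = spec.get("containers", []) + spec.get("initContainers", [])
    for c in containers:
        name = c.get("name", "<unnamed>")
        image = c.get("image", "")
        if not image.startswith(ALLOWED_REGISTRIES):
            found.append(f"container {name}: image {image} is not from an allowed registry")
        if (c.get("securityContext") or {}).get("privileged"):
            found.append(f"container {name}: privileged mode is not allowed")
    for v in spec.get("volumes", []):
        if "hostPath" in v:
            found.append(f"volume {v.get('name', '<unnamed>')}: hostPath volumes are not allowed")
    return found


# Hypothetical manifest that breaks all three rules.
pod = {
    "metadata": {"name": "debug-pod"},
    "spec": {
        "containers": [
            {
                "name": "shell",
                "image": "docker.io/library/busybox:latest",
                "securityContext": {"privileged": True},
            }
        ],
        "volumes": [{"name": "host-root", "hostPath": {"path": "/"}}],
    },
}
for message in violations(pod):
    print(message)
```

Run in CI or at admission, a check like this rejects the manifest before it reaches the cluster, which a code review comment cannot guarantee.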
