
How to Use Ansible Dynamic Inventory Effectively

TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities, and articles.

👀 Remote Jobs

1001 is hiring a DevOps Engineer

Remote Location: Worldwide

Metabase is hiring a Senior SRE/DevOps Engineer

Remote Location: Worldwide

📚️ Resources

Looking to promote your company, product, service, or event to 50,000+ Cloud Native Professionals? Let's work together. Advertise With Us

🧠 DEEP DIVE USE CASE

How to Use Ansible Dynamic Inventory Effectively

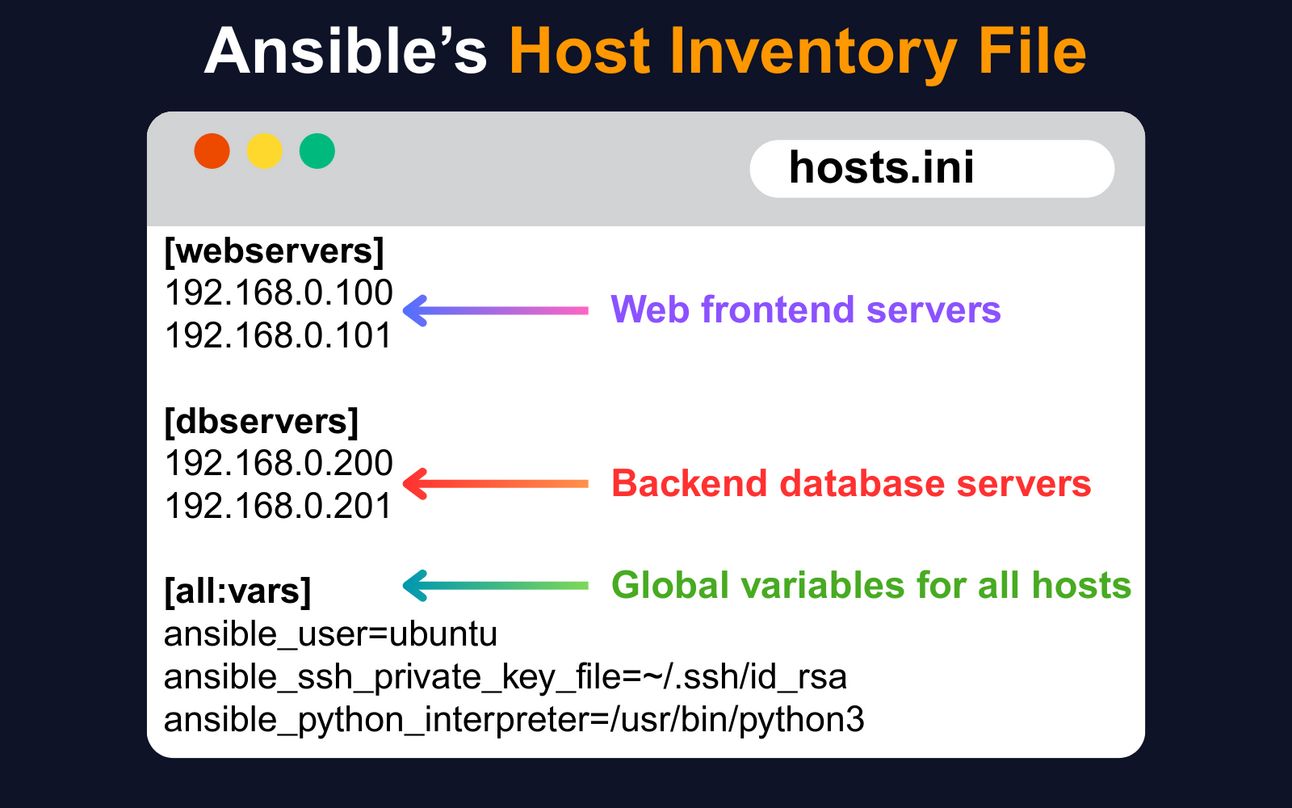

When you run anything in Ansible, it needs to know which machines to connect to. These machines are listed in what’s called an inventory file. A basic inventory file in INI format can look like this:

You can group servers by purpose, like webservers or databases. The [all:vars] section defines values that apply to every host, like the SSH user and key.
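As a rough illustration of that structure, the sketch below uses a hypothetical inventory (the IPs, the `ubuntu` user, and the key path are all made up) and reads it with Python's standard `configparser`. It shows how each bracketed group maps to a list of hosts and how `[all:vars]` values end up attached to every host. This is only an approximation: Ansible has its own INI inventory parser, which also understands per-host variables, host ranges, and `:children` groups that this sketch ignores.

```python
import configparser

# Hypothetical inventory; IPs, user, and key path are illustrative only.
INVENTORY_INI = """\
[webservers]
192.168.0.101
192.168.0.102

[databases]
192.168.0.201

[all:vars]
ansible_user=ubuntu
ansible_ssh_private_key_file=~/.ssh/id_rsa
"""


def load_inventory(text: str) -> dict:
    """Return {host: {"groups": [...], "vars": {...}}} for a simple INI inventory.

    Approximation only: handles bare host lines plus [all:vars], nothing else.
    """
    parser = configparser.ConfigParser(
        allow_no_value=True,  # bare host lines have no "=value" part
        delimiters=("=",),    # only "=" separates variable names from values
        interpolation=None,   # treat "%" literally in values
    )
    parser.optionxform = str  # keep host and variable names case-sensitive
    parser.read_string(text)

    all_vars = dict(parser["all:vars"]) if parser.has_section("all:vars") else {}
    hosts: dict = {}
    for group in parser.sections():
        if group.endswith(":vars"):
            continue  # variable sections, not host groups
        for host in parser[group]:
            # Every host starts with a copy of the [all:vars] values.
            entry = hosts.setdefault(host, {"groups": [], "vars": dict(all_vars)})
            entry["groups"].append(group)
    return hosts


if __name__ == "__main__":
    for host, info in load_inventory(INVENTORY_INI).items():
        print(host, info["groups"], info["vars"]["ansible_user"])
```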

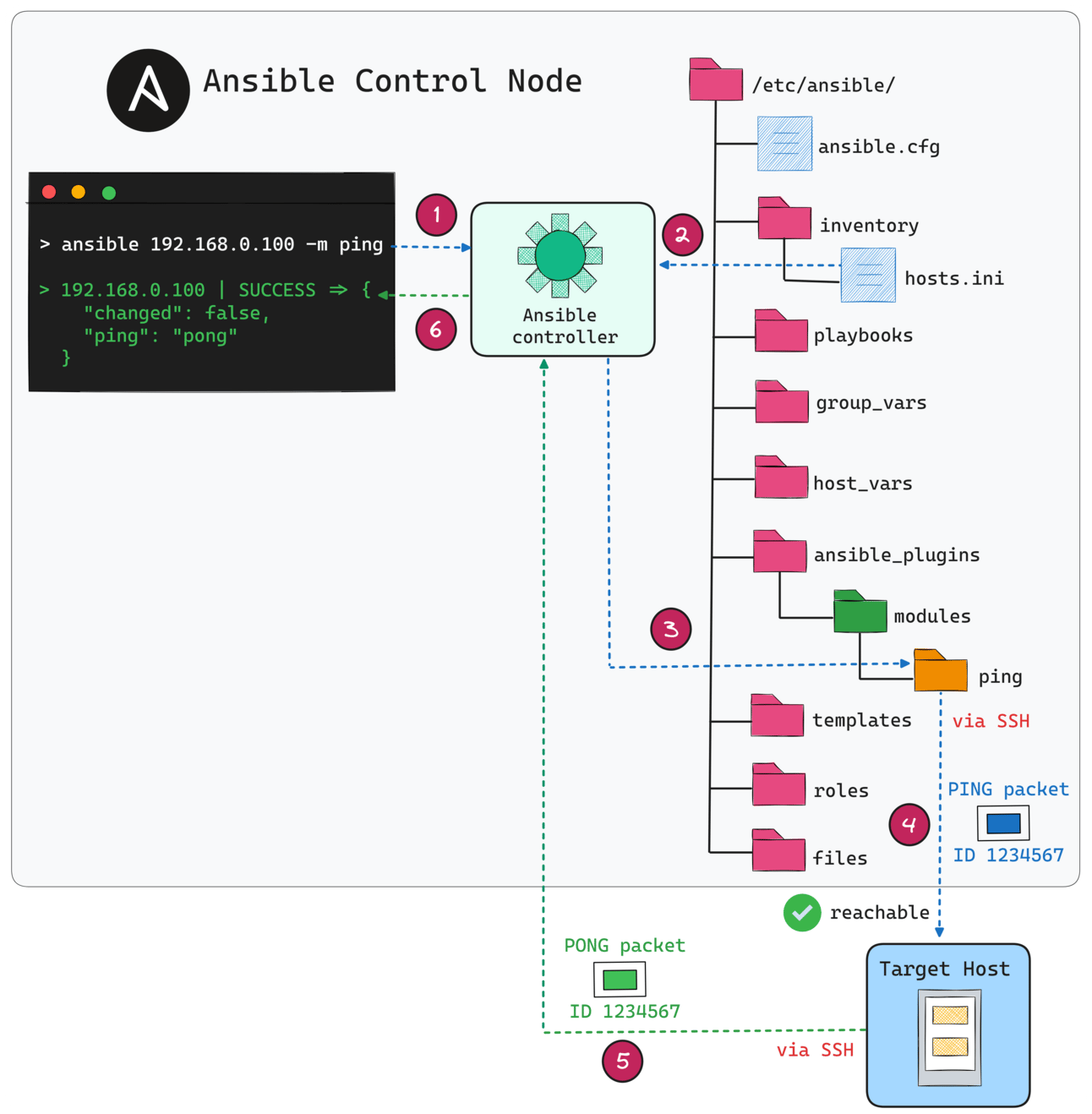

Ansible connects to remote machines over SSH to run tasks. Once the inventory is set up, you can run Ansible’s ping module to confirm that it can reach the target machine over SSH before running any actual tasks. Despite the name, this is not an ICMP ping: Ansible logs in to the host and runs a small module there.

`ansible 192.168.0.100 -m ping`

Here’s what actually happens:

The Ansible CLI receives the command with the target host and the ping module.

It consults the inventory and loads the matching host’s details and variables. If the host isn’t listed there, Ansible warns that the host pattern matched nothing and skips it.

The Ansible controller uses SSH to connect to the target machine.

The ping module is pushed temporarily to the host.

The host runs it with its Python interpreter and returns a JSON response like `{"ping": "pong"}`.

This result is sent back to your terminal, confirming connectivity and configuration.
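The push, execute, and reply steps above can be sketched locally in Python. This is not Ansible’s code: there is no SSH, the "module" is a hypothetical few-line stand-in for ping, its arguments are passed on stdin purely for simplicity, and the managed node is simulated by a child Python process on the same machine. What it does show is the contract those steps rely on: the controller copies a self-contained program to a temporary location, runs it, and reads one JSON object back from its output.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical stand-in for a module: reads JSON arguments from stdin and
# prints one JSON result, echoing "data" (default "pong") like ping does.
MODULE_SOURCE = """\
import json
import sys

args = json.load(sys.stdin)
print(json.dumps({"ping": args.get("data", "pong")}))
"""


def run_module(args: dict) -> dict:
    """Copy the module to a temp dir, execute it, and parse its JSON reply.

    Real Ansible transfers the module over SSH and runs it on the managed
    node; here both steps happen locally in a child Python process.
    """
    with tempfile.TemporaryDirectory() as tmp:
        module_path = Path(tmp) / "ping_module.py"
        module_path.write_text(MODULE_SOURCE)  # "push" the module
        completed = subprocess.run(
            [sys.executable, str(module_path)],  # execute it
            input=json.dumps(args),
            capture_output=True,
            text=True,
            check=True,
        )
    return json.loads(completed.stdout)  # controller parses the JSON reply


if __name__ == "__main__":
    print(run_module({}))
    print(run_module({"data": "hello"}))
```

The `data` argument mirrors the real ping module’s `data` option, whose value is returned in place of `pong`.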

This approach is simple and powerful, but static host files don’t scale in dynamic environments.

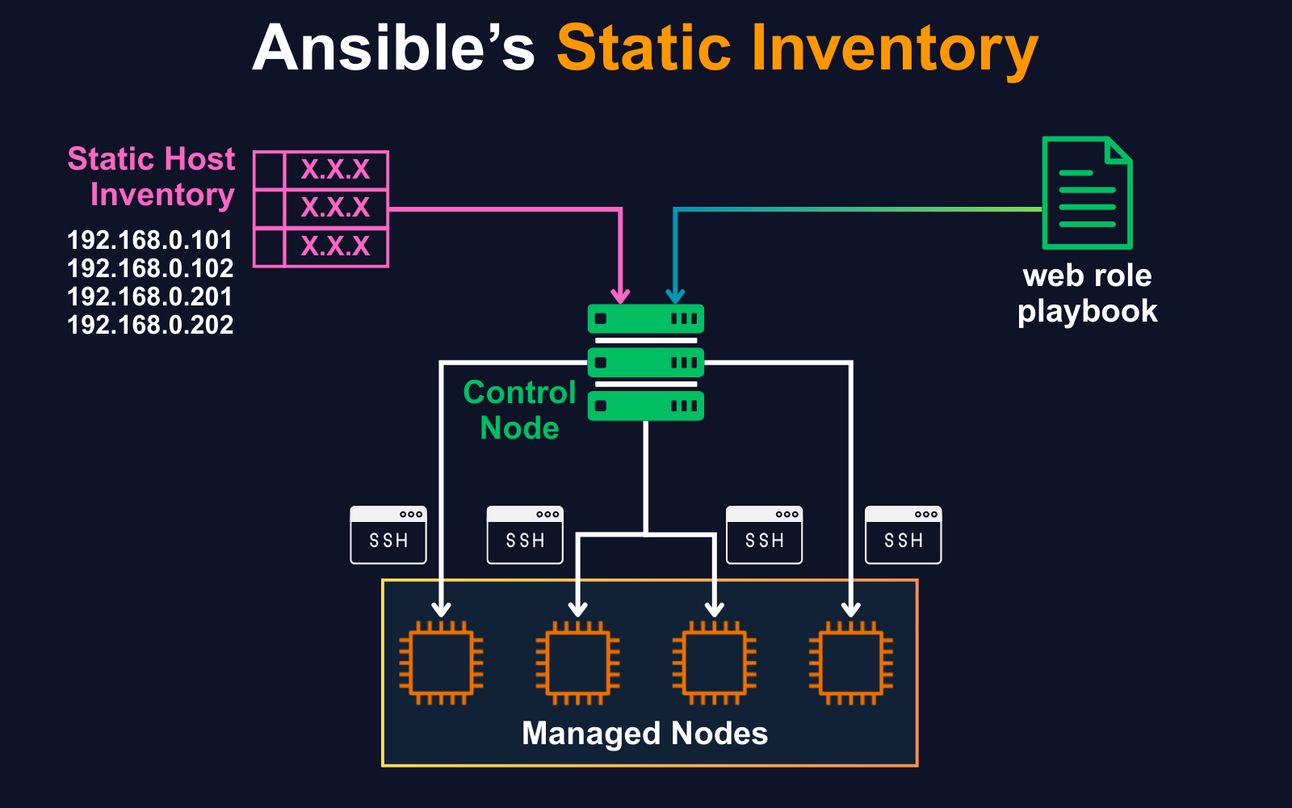

The control node is the machine where you install and run Ansible.

A managed node is any remote machine that Ansible connects to and automates using SSH.
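As a rough mental model of that relationship, the control node turns a managed node’s inventory variables into an SSH connection. The sketch below maps the standard connection variables `ansible_host`, `ansible_port`, `ansible_user`, and `ansible_ssh_private_key_file` onto a plain `ssh` command line. The `ssh_command` helper and the host values are hypothetical, and Ansible’s actual ssh connection plugin passes many more options than this.

```python
import shlex


def ssh_command(host: str, hostvars: dict) -> list:
    """Build a plain ssh argv from common Ansible connection variables.

    Rough approximation: Ansible's ssh connection plugin adds many more
    options (connection reuse, timeouts, etc.) than shown here.
    """
    target = hostvars.get("ansible_host", host)  # fall back to the inventory name
    argv = ["ssh", "-p", str(hostvars.get("ansible_port", 22))]
    key = hostvars.get("ansible_ssh_private_key_file")
    if key:
        argv += ["-i", key]
    user = hostvars.get("ansible_user")
    argv.append(f"{user}@{target}" if user else target)
    return argv


if __name__ == "__main__":
    cmd = ssh_command("web-1", {"ansible_host": "192.168.0.101", "ansible_user": "ubuntu"})
    print(shlex.join(cmd))
```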

Static Inventory

Static inventory might work when you have 5 to 10 servers. But once you're working with real infrastructure in AWS, Azure, or GCP, things move fast. New servers get added during scaling, test environments spin up temporarily, and some machines are shut down nightly to save cost.

You either:

Manually update hosts.ini every time a server is added or removed

Or accept that Ansible may target stale or nonexistent machines

Both are bad options in production.
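A dynamic inventory avoids that trade-off: instead of reading hosts.ini, Ansible runs a script (or an inventory plugin) that asks your cloud which machines exist right now. Below is a minimal sketch of the script flavour, assuming a hypothetical `fetch_instances()` that returns hard-coded data where a real script would call your cloud provider’s API. It follows the JSON shape Ansible expects from inventory scripts: `--list` prints groups with their hosts plus a `_meta.hostvars` map, and `--host <name>` prints that host’s variables. Stopped instances are skipped, so machines shut down for the night are never targeted.

```python
#!/usr/bin/env python3
"""Minimal dynamic inventory script sketch (hypothetical data source)."""
import argparse
import json
import sys


def fetch_instances() -> list:
    """Hypothetical stand-in for a cloud API call; the data is made up."""
    return [
        {"name": "web-1", "ip": "10.0.1.10", "role": "webservers", "state": "running"},
        {"name": "web-2", "ip": "10.0.1.11", "role": "webservers", "state": "stopped"},
        {"name": "db-1", "ip": "10.0.2.20", "role": "databases", "state": "running"},
    ]


def build_inventory(instances: list) -> dict:
    """Group running instances by role in the JSON shape used for --list."""
    inventory = {"_meta": {"hostvars": {}}}
    for inst in instances:
        if inst["state"] != "running":
            continue  # skip stopped machines so they are never targeted
        group = inventory.setdefault(inst["role"], {"hosts": []})
        group["hosts"].append(inst["name"])
        inventory["_meta"]["hostvars"][inst["name"]] = {"ansible_host": inst["ip"]}
    return inventory


def main(argv=None) -> int:
    parser = argparse.ArgumentParser()
    parser.add_argument("--list", action="store_true")
    parser.add_argument("--host")
    args = parser.parse_args(argv)

    inventory = build_inventory(fetch_instances())
    if args.host:
        # Per-host variables; an empty dict if the host is unknown.
        result = inventory["_meta"]["hostvars"].get(args.host, {})
    else:
        result = inventory
    json.dump(result, sys.stdout, indent=2)
    print()
    return 0


if __name__ == "__main__":
    sys.exit(main())
```

Made executable and passed with `-i`, a script like this is used in place of a file, for example `ansible all -i inventory.py -m ping`, and `ansible-inventory -i inventory.py --graph` is a quick way to see what it returns. For AWS, Azure, and GCP, the maintained inventory plugins (such as `amazon.aws.aws_ec2`) are generally a better fit than a hand-written script; the sketch only shows the mechanism.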

