How to Fix Kubectl Access Not Working in EKS

TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities and articles.

IN TODAY'S EDITION

🧠 Use Case

How to Fix Kubectl Access Not Working in EKS

🚀 Top News

👀 Remote Jobs

Quokka is hiring a DevOps Engineer

Remote Location: Worldwide

Token Metrics is hiring a DevOps/Site Reliability Engineer

Remote Location: Global Remote-Non US

📚️ Resources

🧠 USE CASE

How to Fix Kubectl Access Not Working in EKS

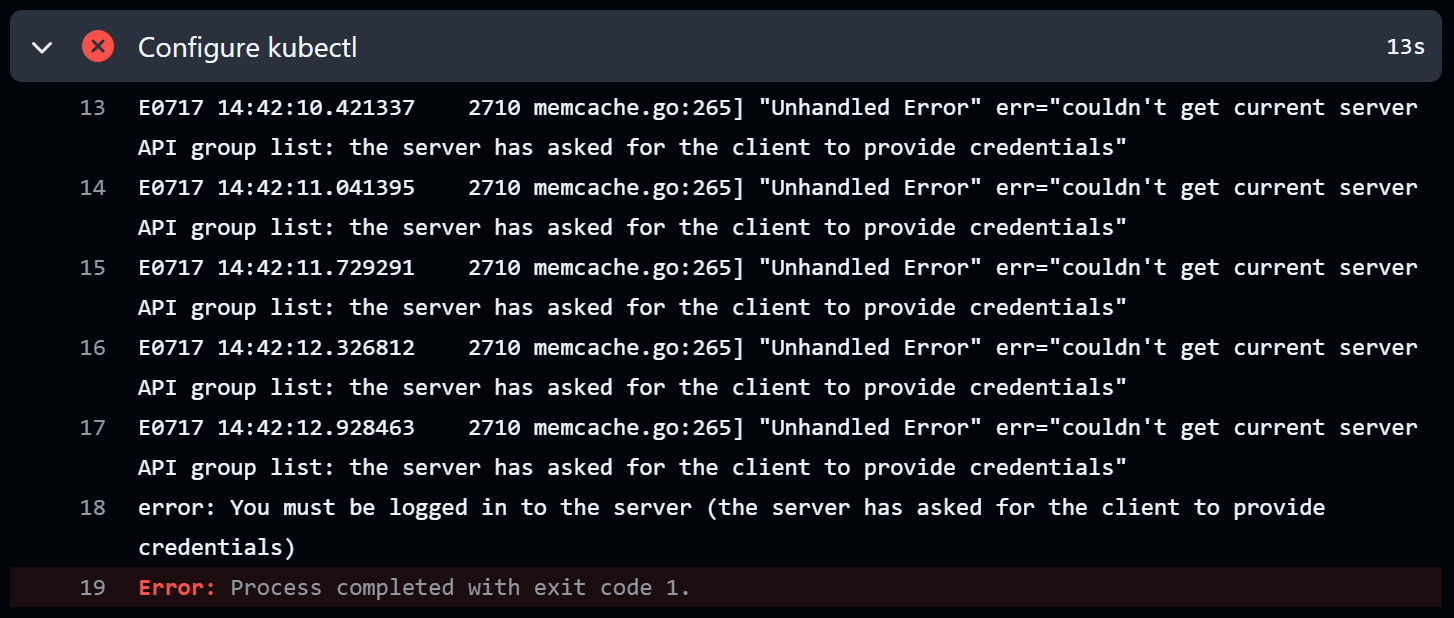

So you’ve set up your EKS cluster, run your GitHub Actions pipeline (or maybe a kubectl command from your terminal), and hit this:

"Unhandled Error" err="couldn't get current server API group list: the server has asked for the client to provide credentials"

This means kubectl could not authenticate with your EKS cluster. The usual causes are:

- Your AWS credentials have expired.

- The kubeconfig hasn’t been generated or is outdated.

- You’re inside CI/CD and forgot to assume the right IAM role.

- You’re using EKS Access Entries but the identity isn’t mapped.

- The IAM identity running the command doesn’t have access to the cluster.

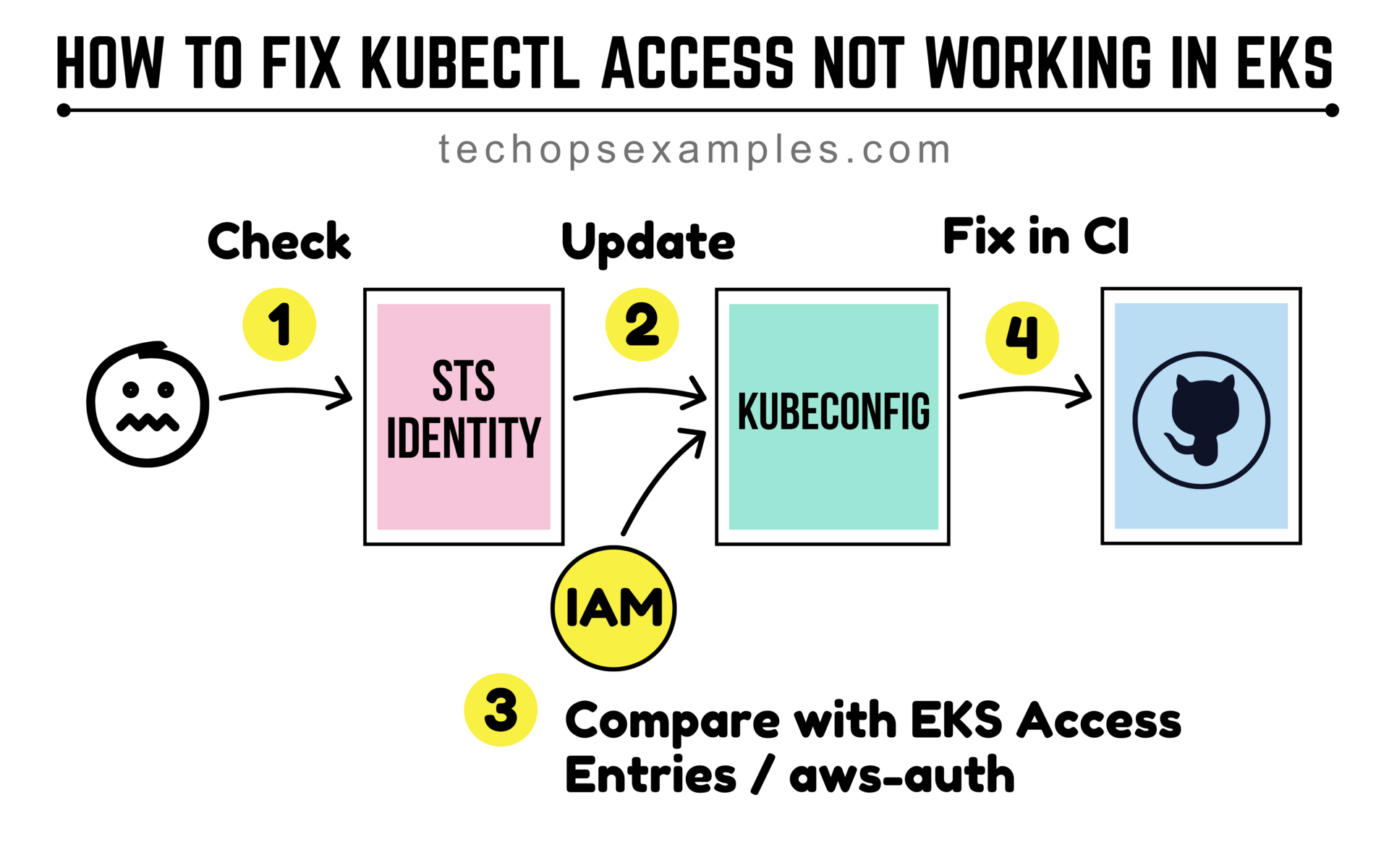

You can break this down into 4 parts. Here’s what I do when I hit this error:

1. Check the STS identity

Run this command to see which IAM identity is being used:

aws sts get-caller-identity

If this fails, your AWS credentials are broken or expired. If it succeeds, confirm that the Arn in the output is the identity you expect. In CI, make sure the workflow is assuming the right role.
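One detail that can hide a mapping problem: when the caller is an assumed role, `get-caller-identity` prints an STS ARN (`arn:aws:sts::<account-id>:assumed-role/<role-name>/<session>`), while cluster access is granted to the IAM role ARN (`arn:aws:iam::<account-id>:role/<role-name>`). Here is a minimal sketch that converts one form to the other so you can compare it against the cluster's access list. The function name `to_iam_role_arn` and the sample account ID are illustrative assumptions. The assumed-role ARN carries no role path, so for a role created under a path the output will lack it.

```shell
#!/bin/sh
# Hypothetical helper: convert an STS assumed-role ARN (as printed by
# `aws sts get-caller-identity`) into the IAM role ARN form.
# Any other ARN (for example an IAM user) is printed unchanged.
to_iam_role_arn() {
  arn=$1
  case $arn in
    arn:*:sts::*:assumed-role/*/*)
      prefix=${arn%%:sts::*}        # partition prefix, e.g. "arn:aws"
      rest=${arn#*:sts::}           # "<account-id>:assumed-role/<role>/<session>"
      account=${rest%%:*}           # "<account-id>"
      role=${rest#*:assumed-role/}  # "<role>/<session>"
      role=${role%%/*}              # "<role>"
      printf '%s:iam::%s:role/%s\n' "$prefix" "$account" "$role"
      ;;
    *)
      printf '%s\n' "$arn"
      ;;
  esac
}

# Usage (requires working AWS credentials):
#   caller_arn=$(aws sts get-caller-identity --query Arn --output text)
#   to_iam_role_arn "$caller_arn"
```

The function only does string manipulation, so it is safe to run before any cluster access exists.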

2. Update your kubeconfig

Once AWS credentials are sorted, you need to tell kubectl how to talk to the EKS cluster:

aws eks update-kubeconfig --region <region> --name <cluster-name>

This command populates your kubeconfig with the right token provider and endpoint. You can verify it worked with:

kubectl get svc

3. Check if the IAM identity has EKS access

If you're using EKS Access Entries, run:

eksctl get accessentry --cluster <cluster-name>

Look for your IAM user or role in the list.

If you're using the older aws-auth ConfigMap method:

kubectl -n kube-system get configmap aws-auth -o yaml

Ensure your IAM entity is mapped under mapRoles or mapUsers.
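Scanning that output by eye gets error-prone once the list grows. Here is a rough sketch that reads either command's output on stdin and reports whether a given ARN appears as an exact whitespace-separated token. The function name `arn_is_listed` and the sample ARN are assumptions for illustration. It is a plain text match, not a YAML parser, so treat a hit as a hint, not proof of access.

```shell
#!/bin/sh
# Hypothetical helper: exit 0 if the ARN given as $1 appears on stdin as an
# exact whitespace-separated token, exit 1 otherwise.
# An exact match avoids false hits such as "role/deployer" matching
# "role/deployer-admin".
arn_is_listed() {
  awk -v want="$1" '
    {
      gsub(/"/, " ")                 # treat double quotes as separators
      for (i = 1; i <= NF; i++) {
        if ($i == want) found = 1
      }
    }
    END { exit (found ? 0 : 1) }
  '
}

# Usage (requires cluster access for the identity running it):
#   kubectl -n kube-system get configmap aws-auth -o yaml \
#     | arn_is_listed "arn:aws:iam::123456789012:role/deployer" \
#     && echo "mapped" || echo "not mapped"
```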

4. Fix it in CI/CD

If this error is in GitHub Actions or another CI tool, make sure you're running these steps before you use kubectl:

- name: Configure AWS credentials
  uses: aws-actions/configure-aws-credentials@v2
  with:
    role-to-assume: arn:aws:iam::<account-id>:role/<role-name>
    aws-region: <aws-region>

- name: Update kubeconfig
  run: aws eks update-kubeconfig --name <cluster-name> --region <aws-region>

- name: Verify access
  run: kubectl get nodes

This ensures kubectl can connect to the cluster using the right identity. If the role is assumed through GitHub's OIDC provider, the job also needs the id-token: write permission.
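For CI tools other than GitHub Actions, the same order of checks can be sketched as one shell function that stops at the first failing step and reports which one it was. The function name `eks_preflight` and its return codes are assumptions made for this example. The commands inside it are the ones used in the steps above.

```shell
#!/bin/sh
# Hypothetical helper: run the checks in order and stop at the first failure.
# Return codes (an assumption of this sketch): 1 = credentials,
# 2 = kubeconfig, 3 = cluster access.
eks_preflight() {
  cluster=$1
  region=$2

  # Step 1: are there valid AWS credentials, and whose are they?
  if ! aws sts get-caller-identity --query Arn --output text; then
    echo "credentials check failed: AWS credentials are missing or expired" >&2
    return 1
  fi

  # Step 2: write the cluster endpoint and token provider into kubeconfig.
  if ! aws eks update-kubeconfig --name "$cluster" --region "$region"; then
    echo "kubeconfig update failed for cluster $cluster in $region" >&2
    return 2
  fi

  # Step 3: can this identity actually talk to the cluster?
  if ! kubectl get nodes; then
    echo "cluster access failed: check the access entry or aws-auth mapping" >&2
    return 3
  fi
}

# Usage in a CI step:
#   eks_preflight "<cluster-name>" "<aws-region>" || exit $?
```

Distinct return codes make it easier to tell from a CI log whether to look at the credentials, the kubeconfig, or the cluster-side mapping.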