TechOps Examples

Hey — It's Govardhana MK 👋

🧠 DEEP DIVE USE CASE

How Kubernetes Requests and Limits Really Work

If you're just getting into Kubernetes, resource management is one of the most misunderstood yet critical topics. Whether you’re deploying a single microservice or running a production-grade system, how you configure CPU and memory can decide how stable, efficient, and secure your workloads are.

Let’s break it down from the basics and gradually build towards real-world application.

First, you need to know about ResourceQuotas.

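A ResourceQuota sets hard caps on the total CPU and memory that pods in a namespace can request or be limited to, and on how many objects (pods, services, and so on) the namespace can hold. It prevents one team from consuming more than its share of a shared cluster and keeps access fair across environments.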
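As a rough illustration, a ResourceQuota for one namespace might look like the sketch below. The namespace `team-a`, the object name, and every number are assumptions chosen for the example, not values from a real cluster.

```yaml
# Hypothetical quota for a namespace named "team-a" (names and numbers are illustrative).
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-compute
  namespace: team-a
spec:
  hard:
    requests.cpu: "4"       # cap on the sum of CPU requests of pods in the namespace
    requests.memory: 8Gi    # cap on the sum of memory requests
    limits.cpu: "8"         # cap on the sum of CPU limits
    limits.memory: 16Gi     # cap on the sum of memory limits
    pods: "20"              # cap on the number of pods
```

Once a quota like this covers CPU or memory, pods in that namespace that omit the matching requests or limits are rejected unless a LimitRange supplies defaults.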

How Kubernetes Applies Resource Quotas

The API server receives the pod request and runs admission checks.

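The ResourceQuota admission plugin adds the pod's CPU and memory requests and limits to what the namespace already uses and checks the totals against the quota.

If the totals would exceed the quota, the request is rejected with a 403 Forbidden error before the pod is ever created or scheduled.

If the pod fits, the quota's recorded usage is updated. During the same admission phase, the pod is also linked to a service account.

The pod and the updated quota usage are saved in etcd for tracking and enforcement.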
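To make the admission check concrete, here is a worked sketch under stated assumptions. Suppose the namespace `team-a` (a hypothetical name) has a quota of `requests.cpu: "2"` and its existing pods already request 1500m. The pod below asks for another 750m, so the total would be 2250m, which is above the 2000m quota, and the API server would reject it.

```yaml
# Hypothetical pod; names, image, and numbers are illustrative.
# Assumed quota: requests.cpu = 2 (2000m), already used = 1500m.
# 1500m + 750m = 2250m > 2000m, so admission fails and nothing is scheduled.
apiVersion: v1
kind: Pod
metadata:
  name: report-worker
  namespace: team-a
spec:
  containers:
    - name: worker
      image: registry.example.com/report-worker:1.0   # placeholder image
      resources:
        requests:
          cpu: 750m
```

Lowering the request to 500m or less would bring the total to at most 2000m, and the same pod would be admitted.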

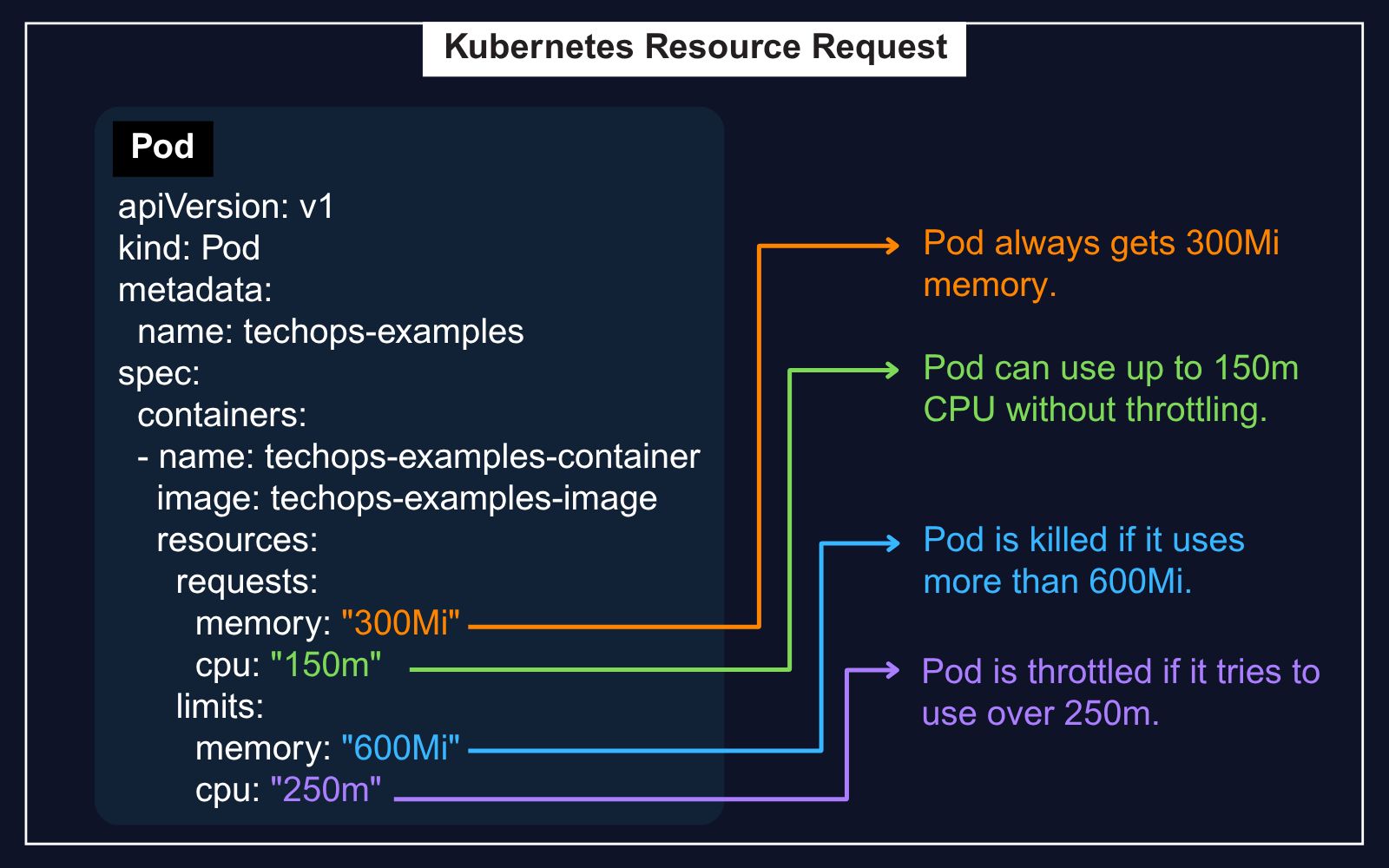

Requests and Limits - What They Really Mean

Containers run inside pods, and every container consumes compute resources. But you don’t just let them use as much as they want. You tell Kubernetes two things:

Request: This is the amount of a resource the container is guaranteed. The scheduler uses it to pick a node with enough unreserved capacity.

Limit: This is the upper cap. A container cannot exceed this.

Think of it like booking a hotel room.

The "request" is the space you block ahead of time.

The "limit" is the maximum you’re allowed to use. You can’t burst beyond it.

In case of CPU:

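If usage exceeds the request but stays under the limit, the container keeps running and can use spare CPU on the node. When the node is busy, CPU time is shared in proportion to requests, so the container may be squeezed back toward its request.

If it tries to go beyond the limit, it is throttled immediately. It slows down, but it is not killed.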
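A minimal sketch of the CPU side of a container spec, with illustrative numbers. CPU is expressed in cores or millicores, where `1000m` equals one core. The comments describe how the limit is typically enforced on Linux through the CFS quota with its default 100 ms period; treat that as background, not something this fragment configures.

```yaml
# Fragment of a container spec; values are illustrative.
resources:
  requests:
    cpu: 250m   # 0.25 core counted at scheduling time; also sets the container's relative share under contention
  limits:
    cpu: 500m   # 0.5 core hard cap: roughly 50 ms of CPU time per 100 ms period, then throttled until the next period
```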

For memory:

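If a container uses more than it requested, that's allowed as long as the node has memory to spare, though pods above their request are the first candidates for eviction when the node runs low on memory. If it crosses the limit, the container is killed with an OOM (Out Of Memory) error and restarted according to the pod's restart policy.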
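The memory side can be sketched the same way, again with made-up numbers. Memory is given in bytes, usually with binary suffixes such as `Mi` and `Gi`.

```yaml
# Fragment of a container spec; values are illustrative.
resources:
  requests:
    memory: 256Mi   # used by the scheduler to place the pod
  limits:
    memory: 512Mi   # going above this gets the container OOM-killed
```

When that happens, the container's last state shows the reason `OOMKilled`, which is a quick way to tell a memory limit problem apart from an application crash.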

A real-world pod manifest looks like this:
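A minimal sketch of such a manifest, with a hypothetical pod name, namespace, image, and resource values, could be:

```yaml
# Illustrative pod; every name and number here is an assumption.
apiVersion: v1
kind: Pod
metadata:
  name: web-api
  namespace: team-a
spec:
  containers:
    - name: web-api
      image: registry.example.com/web-api:1.0   # placeholder image
      resources:
        requests:
          cpu: 250m        # guaranteed share, used for scheduling
          memory: 256Mi
        limits:
          cpu: 500m        # throttled above this
          memory: 512Mi    # OOM-killed above this
```

Here the container is scheduled against 250m of CPU and 256Mi of memory, and it can burst up to 500m of CPU and 512Mi of memory.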

This kind of setup is essential in shared clusters. It avoids noisy neighbor issues and makes sure pods don’t hog all the resources.

Having established how requests, limits, and resource quotas work in Kubernetes, let’s discuss with visuals how they are structured per namespace and how they apply directly to pods and containers.
