TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities, and articles.

👀 Remote Jobs

PostHog is hiring an Infrastructure Engineer

Remote Location: Worldwide

Atlan is hiring a Site Reliability Engineer II

Remote Location: India

📚 Resources

Looking to promote your company, product, service, or event to 58,000+ Cloud Native Professionals? Let's work together. Advertise With Us

🧠 DEEP DIVE USE CASE

How Kubernetes Connects Pods Using Services and DNS

When working with Kubernetes at scale, understanding how Pods are connected is more important than memorizing Service types. Most networking issues come from not knowing what Services and DNS actually do behind the scenes.

Once you see how Kubernetes routes traffic and resolves names internally, many production issues become easier to reason about.

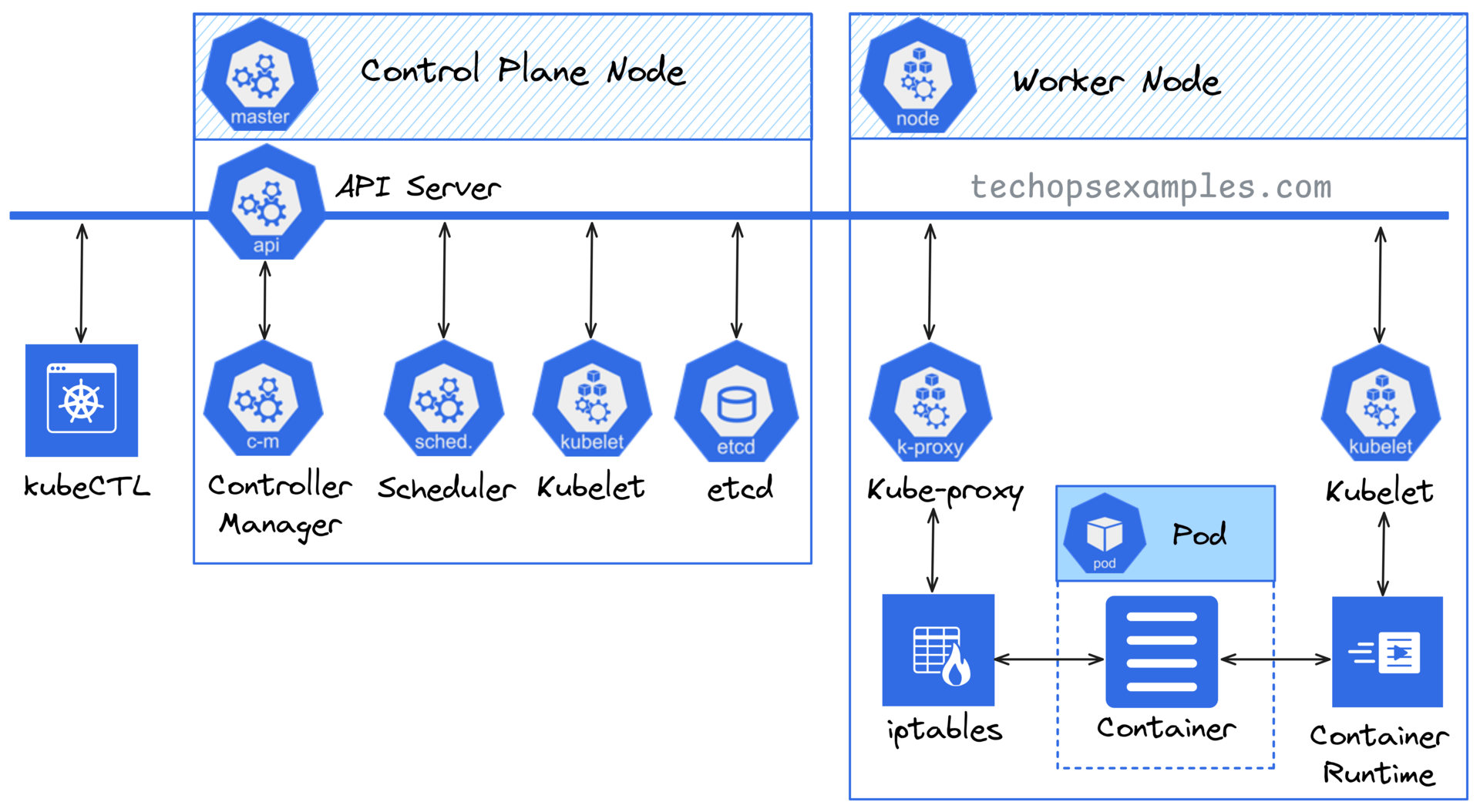

Let’s now get hands-on and break down the architecture, using the attached diagram to make sense of the key components.

Kubernetes Architecture

The Control Plane Node: The Brain 🧠

This is where the decision making happens. Every Kubernetes cluster has one or more control plane nodes that oversee everything in the cluster.

Here's how it all fits together:

API Server (api):

Think of it as the front desk of Kubernetes. Every kubectl command and every interaction between internal components goes through the API server. It authenticates and validates each request, persists the result in etcd, and lets other components watch for the changes they care about.
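Under the hood, the API server exposes every object as a REST resource, and kubectl turns commands into HTTP requests against paths like the ones below. The `api_path` helper is hypothetical (not part of any client library); it only illustrates the path convention: the core group (Pods, Services) is served under `/api/v1`, every other group under `/apis/<group>/<version>`.

```python
from typing import Optional


def api_path(group: str, version: str, resource: str,
             namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build a Kubernetes API path (hypothetical helper, for illustration only)."""
    # The core ("legacy") group has an empty name and is served under /api;
    # named groups such as "apps" are served under /apis/<group>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:  # cluster-scoped resources (e.g. nodes) skip this segment
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


# `kubectl get pods -n default` issues a GET on this path:
print(api_path("", "v1", "pods", namespace="default"))
# A Deployment named "web" in namespace "shop":
print(api_path("apps", "v1", "deployments", namespace="shop", name="web"))
```

You can compare against a real cluster with `kubectl get --raw /api/v1/namespaces/default/pods`, which prints the raw JSON response from the API server.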

Controller Manager (c-m):

The automation genius. If your app's desired state (like 4 replicas) doesn’t match reality, the controller manager steps in to create, delete, or update resources.
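The heart of every controller is a reconcile step: compare desired state with observed state and emit the actions that close the gap. Here is a minimal sketch of that idea for replica counts, assuming Pods are reduced to plain names. The real ReplicaSet controller also ranks Pods before deleting them (for example, preferring unready or unscheduled ones), which this sketch ignores by simply trimming from the end.

```python
from typing import List, Tuple


def reconcile(desired_replicas: int, running_pods: List[str]) -> Tuple[int, List[str]]:
    """Return (how many Pods to create, which Pods to delete)."""
    gap = desired_replicas - len(running_pods)
    if gap > 0:
        return gap, []                  # too few: create the missing Pods
    if gap < 0:
        return 0, running_pods[gap:]    # too many: delete the surplus (last ones here)
    return 0, []                        # desired == actual: nothing to do


print(reconcile(4, ["web-a", "web-b"]))                     # (2, [])
print(reconcile(2, ["web-a", "web-b", "web-c", "web-d"]))   # (0, ['web-c', 'web-d'])
```

Controllers run this comparison repeatedly, triggered by watch events, so a crashed Pod or a lost node simply shows up as a new gap on the next pass.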

Scheduler (sched):

New pod? Cool. The scheduler finds the best worker node for it, considering factors like resources, affinity, and taints. It's all about optimal placement.
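Scheduling happens in two phases: filter out nodes that cannot run the Pod, then score the rest. A minimal sketch, assuming hypothetical `Node` and `PodRequest` types (not the scheduler's real data model), taints reduced to bare keys with a NoSchedule effect, and a scoring rule only loosely inspired by the default "least allocated" preference: pick the node with the most CPU left after placement, breaking ties on memory.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Node:
    name: str
    free_cpu_m: int     # unreserved CPU, millicores
    free_mem_mi: int    # unreserved memory, MiB
    taints: Set[str] = field(default_factory=set)  # taint keys (NoSchedule assumed)


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int
    tolerations: Set[str] = field(default_factory=set)


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    # Phase 1, filter: the Pod must fit, and every node taint must be tolerated.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m
        and n.free_mem_mi >= pod.mem_mi
        and n.taints <= pod.tolerations
    ]
    if not feasible:
        return None  # no fit: the real scheduler leaves the Pod Pending
    # Phase 2, score: prefer the node with the most headroom after placement.
    best = max(feasible, key=lambda n: (n.free_cpu_m - pod.cpu_m, n.free_mem_mi - pod.mem_mi))
    return best.name


nodes = [
    Node("worker-1", free_cpu_m=500, free_mem_mi=1024),
    Node("worker-2", free_cpu_m=2000, free_mem_mi=4096),
    Node("cp-1", free_cpu_m=4000, free_mem_mi=8192,
         taints={"node-role.kubernetes.io/control-plane"}),
]
print(schedule(PodRequest(cpu_m=250, mem_mi=256), nodes))  # worker-2
```

The control plane node has the most free capacity but is filtered out by its taint; a Pod carrying a matching toleration would land there instead.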

etcd:

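The brain's memory. This is a strongly consistent, distributed key-value store that holds the entire cluster state. If etcd is unavailable, the API server can no longer read or persist changes, so the cluster cannot be modified (containers that are already running generally keep running). If etcd data is lost without a backup, the cluster's state is lost with it.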
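etcd itself knows nothing about Pods: it stores opaque values under string keys and stamps every write with a cluster-wide, monotonically increasing revision, which is what lets watchers resume from a known point. The toy `TinyStore` class below is hypothetical (in-memory, single node) and mimics just those two ideas. The `/registry/pods/<namespace>/<name>` key shape follows the API server's default layout for Pods; other resources can differ, and real values are encoded API objects rather than the JSON strings used here.

```python
from typing import Dict, Optional, Tuple


class TinyStore:
    """Toy stand-in for etcd: a key-value map plus a global revision counter."""

    def __init__(self) -> None:
        self.revision = 0
        self._data: Dict[str, Tuple[str, int]] = {}  # key -> (value, mod_revision)

    def put(self, key: str, value: str) -> int:
        self.revision += 1  # every write bumps the store-wide revision
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str) -> Optional[Tuple[str, int]]:
        return self._data.get(key)


def pod_key(namespace: str, name: str) -> str:
    return f"/registry/pods/{namespace}/{name}"


store = TinyStore()
store.put(pod_key("default", "web-1"), '{"phase": "Pending"}')
store.put(pod_key("default", "web-1"), '{"phase": "Running"}')
print(store.get(pod_key("default", "web-1")))  # ('{"phase": "Running"}', 2)
```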

kubelet on Control Plane:

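In many clusters (for example, those built with kubeadm), the control plane components themselves run as static Pods. Just like on worker nodes, the kubelet on the control plane node keeps those containers running and healthy.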

Worker Node: The Muscles 💪

While the control plane is busy planning and deciding, the worker nodes do the actual work.

Here's what happens under the hood:

kubelet:

The node's manager. It takes orders from the API server and ensures that containers (running inside pods) are healthy and doing what they're supposed to. It’s like the node's personal assistant.
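One concrete part of keeping containers healthy is restarting them when they exit (subject to the Pod's `restartPolicy`). According to the Kubernetes Pod lifecycle documentation, the kubelet waits with an exponential back-off (10s, 20s, 40s, and so on) capped at five minutes, and resets it once a container has run for 10 minutes without problems; this delay is what sits behind the familiar `CrashLoopBackOff` status. The function below is a minimal sketch of that default schedule only; `restart_delay_seconds` is a hypothetical name, and newer releases may allow tuning the values.

```python
def restart_delay_seconds(previous_restarts: int, base: int = 10, cap: int = 300) -> int:
    """Back-off before the next restart, after `previous_restarts` failed runs."""
    # Doubles each time (10, 20, 40, ...) until it hits the five-minute cap.
    return min(base * 2 ** previous_restarts, cap)


print([restart_delay_seconds(n) for n in range(7)])
# [10, 20, 40, 80, 160, 300, 300]
```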

Kube-proxy (k-proxy):

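Implements Services on each node. It watches Services and their endpoints and programs iptables rules (or IPVS or nftables rules, depending on the mode) so that traffic sent to a Service's virtual IP is forwarded to one of the backing Pods. Basic Pod-to-Pod connectivity comes from the cluster's CNI network plugin, not from kube-proxy.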
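In iptables mode, a Service's chain holds one rule per ready endpoint. Rule i (counting from 0, out of n endpoints) uses the `statistic` match in random mode with probability 1/(n - i), and the last rule matches unconditionally. That cascade gives every endpoint an equal 1/n share. The sketch below only checks this arithmetic with exact fractions; it does not touch iptables, and the function names are hypothetical.

```python
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    # Rule i fires with probability 1/(n - i); for the last rule that is 1 (unconditional).
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> List[Fraction]:
    """Overall chance that each endpoint's rule is the one that matches."""
    shares: List[Fraction] = []
    not_matched_yet = Fraction(1)
    for p in rule_probabilities(n):
        shares.append(not_matched_yet * p)  # packet reached this rule AND it fired
        not_matched_yet *= 1 - p            # packet fell through to the next rule
    return shares


print([str(p) for p in rule_probabilities(3)])  # ['1/3', '1/2', '1']
print([str(s) for s in effective_shares(3)])    # ['1/3', '1/3', '1/3']
```

This is why the probabilities visible in `iptables-save` output on a node look uneven (roughly 0.33, then 0.5, then none) even though traffic is spread evenly across endpoints.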

Container Runtime:

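This is what runs the actual containers, and the kubelet talks to it through the Container Runtime Interface (CRI). Whether it’s containerd, CRI-O, or Docker Engine (via cri-dockerd, since built-in Docker support was removed in Kubernetes 1.24), it’s all about keeping your apps alive and isolated.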

Pods and Containers:

Pods are the smallest deployable units in Kubernetes. Each Pod wraps one or more containers that share a network namespace (one IP address and port space) and can share storage volumes. The containers inside do the heavy lifting: running your application code.

This internal flow is the foundation for how Kubernetes connects Pods using Services and DNS.

How Kubernetes Schedules Pods and Starts Containers

Once an application is deployed, Kubernetes first focuses on getting the Pod running, not on networking.

A Pod object is created through the API Server, which persists it in etcd

The Scheduler continuously watches for Pods that are not yet assigned to any node

Based on resource availability and constraints, the Scheduler selects a worker node

The Scheduler records its choice by binding the Pod to that node (setting the Pod's spec.nodeName) through the API Server

The kubelet on that node notices the assignment and takes over execution

The kubelet instructs the container runtime (for example, containerd) through the CRI to start the containers

The kubelet reports the Pod status back to the API Server
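This hand-off is driven entirely by components watching and updating shared state in the API server. Here is a toy simulation of the flow, assuming a plain dict in place of the API server and only two Pod fields (`spec.nodeName` and `status.phase`, both real Pod fields). `scheduler_tick` and `kubelet_tick` are hypothetical names, and the node choice is passed in rather than computed.

```python
from typing import Callable, Dict, List

api: Dict[str, dict] = {}  # toy API server: Pod name -> Pod object
events: List[str] = []


def create_pod(name: str) -> None:
    # A new Pod has no node assigned yet and starts in the Pending phase.
    api[name] = {"spec": {"nodeName": None}, "status": {"phase": "Pending"}}
    events.append(f"created {name}")


def scheduler_tick(pick_node: Callable[[dict], str]) -> None:
    for name, pod in api.items():
        if pod["spec"]["nodeName"] is None:           # unscheduled Pod
            pod["spec"]["nodeName"] = pick_node(pod)   # record the binding
            events.append(f"bound {name} to {pod['spec']['nodeName']}")


def kubelet_tick(node: str) -> None:
    for name, pod in api.items():
        if pod["spec"]["nodeName"] == node and pod["status"]["phase"] == "Pending":
            # A real kubelet would now ask the container runtime (over CRI) to start containers.
            pod["status"]["phase"] = "Running"         # report status back
            events.append(f"{node}: started {name}")


create_pod("web-1")
kubelet_tick("worker-1")                # nothing happens: the Pod is not bound yet
scheduler_tick(lambda pod: "worker-1")
kubelet_tick("worker-1")
print(events)
```

Note that no component calls another directly: the kubelet only acts once the scheduler's write to the shared state makes the Pod visible as assigned to its node.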

At this stage, the application is running, but it is not yet reachable in a stable way.

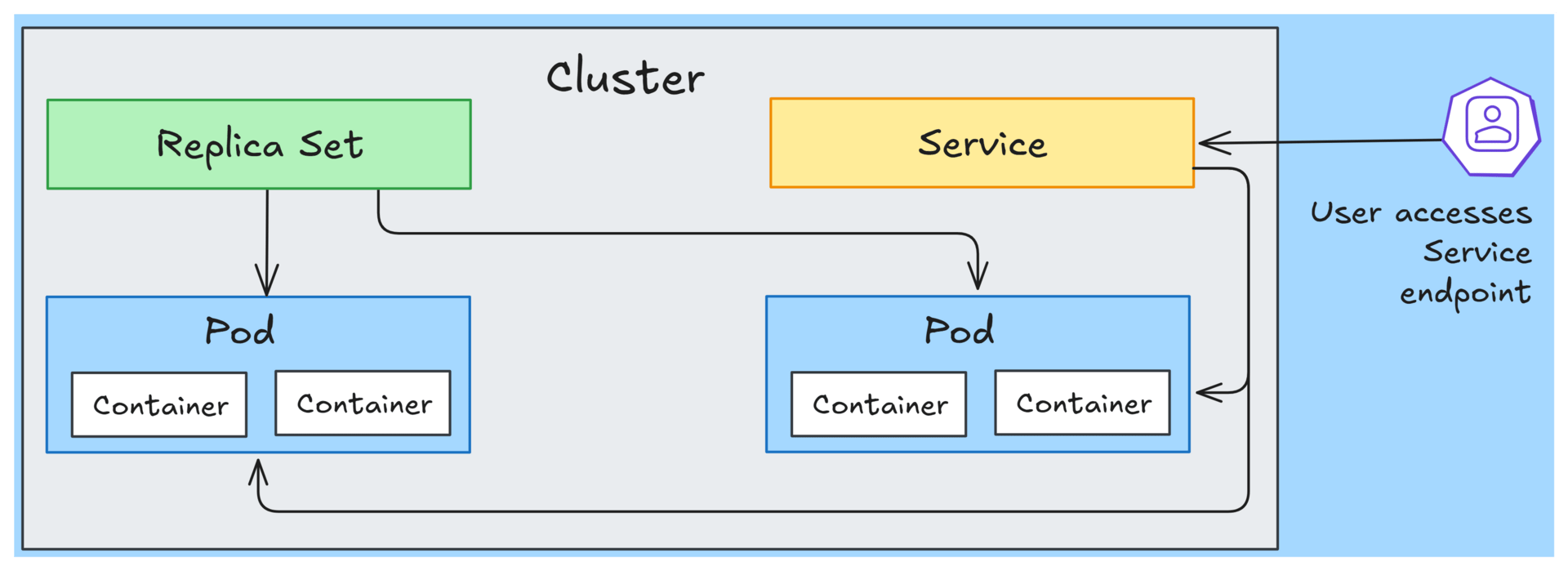

A Service introduces stability into an otherwise dynamic Pod world. While Pods are constantly created, deleted, or rescheduled, a Service exposes a stable virtual IP address and DNS name that do not change for the lifetime of the Service.

The Service does not talk to Pods directly, and it is not bound to a ReplicaSet or Deployment. Instead, it uses label selectors to dynamically identify which Pods are eligible to receive traffic at any moment; of those, only Pods that are passing their readiness checks actually receive it.
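A Service's `spec.selector` is a plain set of key=value pairs, and a Pod matches when it carries all of them (extra labels are fine). A minimal sketch of how the backend list falls out of that rule, assuming Pods simplified to dicts with labels, an IP, and a readiness flag; in a real cluster the EndpointSlice controller does this work, and the function names here are hypothetical.

```python
from typing import Dict, List


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    # Every key=value in the selector must appear on the Pod; extra Pod labels are ignored.
    return all(labels.get(key) == value for key, value in selector.items())


def ready_backends(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    return [p["ip"] for p in pods
            if selector_matches(selector, p["labels"]) and p["ready"]]


pods = [
    {"ip": "10.244.1.5", "labels": {"app": "web", "pod-template-hash": "7d9f"}, "ready": True},
    {"ip": "10.244.2.8", "labels": {"app": "web"}, "ready": False},  # failing readiness probe
    {"ip": "10.244.2.9", "labels": {"app": "db"}, "ready": True},
]
print(ready_backends({"app": "web"}, pods))  # ['10.244.1.5']
```

Because matching is purely label-based, Pods from a new ReplicaSet join the Service automatically as soon as they become ready, with no change to the Service itself.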

When a user or another application accesses the Service endpoint, Kubernetes transparently load balances the request to one of the matching Pods inside the cluster. As Pods come and go, the Service remains unchanged, ensuring uninterrupted connectivity.
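Clients usually reach that endpoint by name. A Service gets the DNS name `<service>.<namespace>.svc.<cluster-domain>` (the cluster domain is commonly `cluster.local`), and each Pod's `/etc/resolv.conf` typically carries a search list plus `options ndots:5`, so short names get expanded. The sketch below approximates the order in which a glibc-style resolver tries candidates under that default search list; `candidate_names` is a hypothetical helper, and exact behavior varies by resolver and cluster DNS configuration.

```python
from typing import List


def candidate_names(name: str, namespace: str,
                    cluster_domain: str = "cluster.local", ndots: int = 5) -> List[str]:
    if name.endswith("."):
        return [name]  # trailing dot: already fully qualified, no search list
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}" for domain in search]
    # Fewer dots than ndots: try the search list first and the bare name last.
    if name.count(".") < ndots:
        return expanded + [name]
    return [name] + expanded


# From a Pod in namespace "shop":
print(candidate_names("web", "shop")[0])           # web.shop.svc.cluster.local
# The cross-namespace short form matches on the second candidate:
print(candidate_names("web.payments", "shop")[1])  # web.payments.svc.cluster.local
```

The same logic explains a common production surprise: with ndots:5, a lookup for an external name such as `api.example.com` walks the whole search list before the real query, adding extra DNS round trips.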

This abstraction is what lets Kubernetes separate application access from the constantly changing Pod lifecycle.

With this basic understanding, let’s take a deeper dive into:

How ReplicaSets Drive Pod Creation and Scheduling in Kubernetes

How Services Are Translated into Endpoints and Network Rules in Kubernetes

🔴 Get my DevOps & Kubernetes ebooks! (free for Premium Club and Personal Tier newsletter subscribers)

Upgrade to Paid to read the rest.

Become a paying subscriber to get access to this post and other subscriber-only content.

Paid subscriptions get you:

- Access to archive of 250+ use cases

- Deep Dive use case editions (Thursdays and Saturdays)

- Access to Private Discord Community

- Invitations to monthly Zoom calls for use case discussions and industry leader meetups

- Quarterly 1:1 'Ask Me Anything' power session