TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case and remote job opportunities.

👀 Remote Jobs

Canonical is hiring an MLOps Engineer

Remote Location: Worldwide

Sporty Group is hiring an Information Security Engineer

Remote Location: Worldwide

🧠 DEEP DIVE USE CASE

How Kubernetes Connection Pooling Works

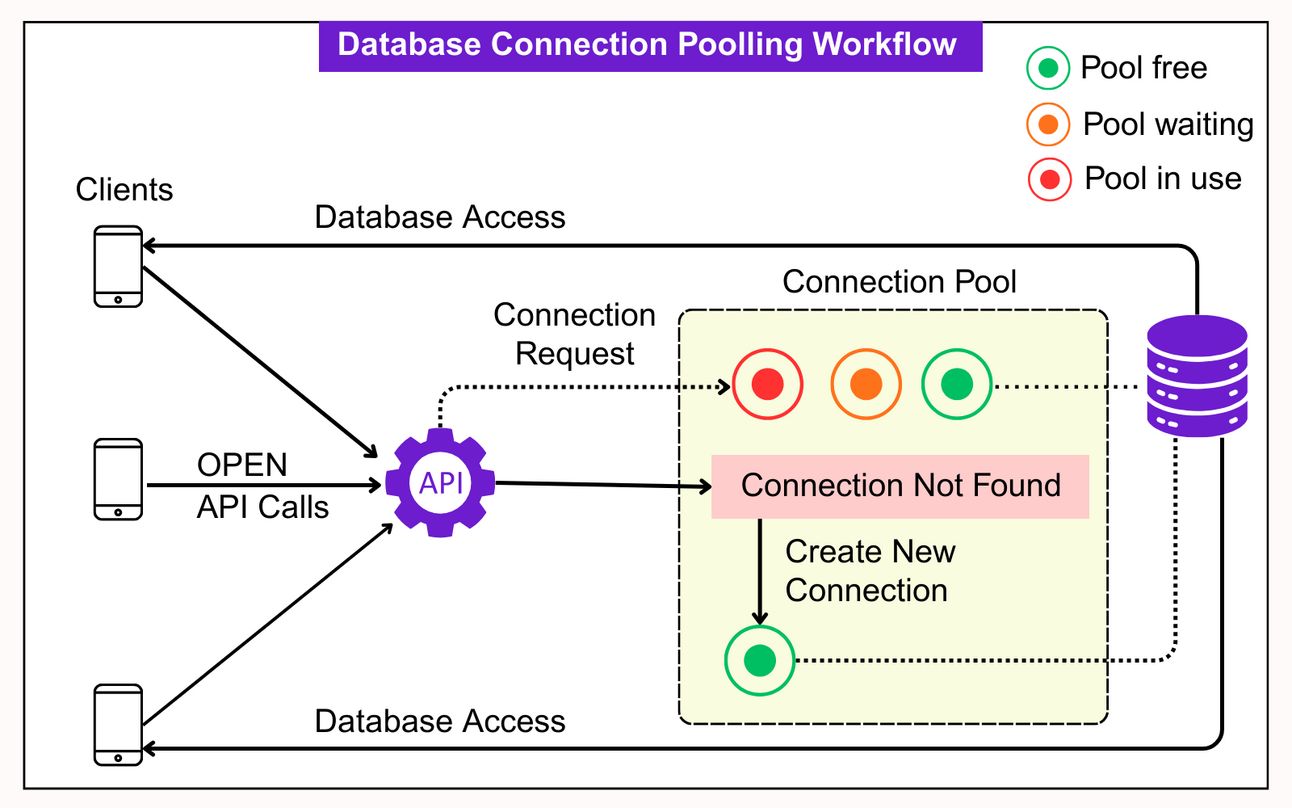

Database connection pooling is a method used to keep database connections open so they can be reused. And that reuse matters more than people assume.

Opening a fresh database connection is expensive. The cost goes beyond the TCP handshake: it includes authentication, authorization, TLS negotiation, and extra network round trips, especially if the database is running outside the cluster. That’s why applications rely on connection pooling. Instead of opening a new connection for every request, they reuse existing ones.

Sounds simple.

The client makes an API call. To serve it, the application checks whether a valid idle connection already exists in the pool. If yes, it uses that connection. If not, a new one is created and added to the pool.

Once done, the connection is returned to the pool for future reuse. Connections that stay idle for too long may be closed to free up resources.
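The lifecycle above can be sketched in a few lines of Python. This is a toy illustration, not how any particular pooler is implemented: `SimplePool`, `FakeConnection`, and the `max_idle_seconds` parameter are hypothetical names, and a real pool would also cap its size and handle concurrency.

```python
import time
from collections import deque


class FakeConnection:
    """Stand-in for a real database connection (assumption for illustration)."""

    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True


class SimplePool:
    """Toy pool: reuse idle connections, create on a miss, evict stale ones."""

    def __init__(self, connect, max_idle_seconds=30.0, clock=time.monotonic):
        self._connect = connect          # hypothetical factory that opens a connection
        self._max_idle = max_idle_seconds
        self._clock = clock
        self._idle = deque()             # (connection, time_returned), oldest on the left
        self.created = 0                 # number of real connections opened so far

    def acquire(self):
        self.evict_idle()
        if self._idle:
            conn, _ = self._idle.pop()   # reuse the most recently returned connection
            return conn
        self.created += 1                # pool miss: pay the full connection cost
        return self._connect()

    def release(self, conn):
        # Hand the connection back so a later request can reuse it.
        self._idle.append((conn, self._clock()))

    def evict_idle(self):
        # Close connections that have been idle longer than max_idle_seconds.
        now = self._clock()
        while self._idle and now - self._idle[0][1] > self._max_idle:
            conn, _ = self._idle.popleft()
            conn.close()


pool = SimplePool(FakeConnection, max_idle_seconds=30.0)
first = pool.acquire()    # nothing idle yet, so a connection is created
pool.release(first)
second = pool.acquire()   # the idle connection is reused, no new one is opened
print(second is first, pool.created)
```

The point of the sketch is the counter: two requests were served but only one connection was ever opened.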

In many setups, teams run a connection pooler inside each application pod and move on. It works fine in local or single-node environments. But inside a Kubernetes cluster, things change. Each pod runs its own isolated pool. You are no longer managing one shared pool but many small, separate ones.

This creates real problems. Idle pods can hold open connections. Active pods may wait even when connections are free elsewhere. Databases hit connection limits without real usage spikes.
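A rough back-of-the-envelope model makes the problem concrete. The numbers below are invented for illustration (five pods, a pool of 20 each, a database limit of 100), and the sketch assumes every pod keeps its full pool open even when idle, which depends on how the pool is configured.

```python
from dataclasses import dataclass


@dataclass
class PodPool:
    name: str
    pool_size: int      # connections this pod's pool holds open
    in_use: int         # connections actually serving queries
    waiting: int = 0    # requests queued because this pod's pool is exhausted


def summarize(pods, db_max_connections):
    """Aggregate per-pod pools into the view the database sees."""
    open_total = sum(p.pool_size for p in pods)
    in_use = sum(p.in_use for p in pods)
    return {
        "open": open_total,
        "in_use": in_use,
        "idle_but_held": open_total - in_use,
        "waiting": sum(p.waiting for p in pods),
        "db_headroom": db_max_connections - open_total,
    }


# Hypothetical cluster: one busy pod, four mostly idle ones.
pods = [
    PodPool("api-0", pool_size=20, in_use=20, waiting=5),
    PodPool("api-1", pool_size=20, in_use=2),
    PodPool("api-2", pool_size=20, in_use=0),
    PodPool("api-3", pool_size=20, in_use=1),
    PodPool("api-4", pool_size=20, in_use=0),
]

report = summarize(pods, db_max_connections=100)
print(report)
```

In this made-up scenario the database is at its connection limit with only 23 connections doing work, while requests on `api-0` wait even though 77 connections sit idle in other pods. Adding a sixth pod would fail to connect at all.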

Here are four patterns you’ll often see in production:

- Partial Pool Utilization

- Full Pool Utilization

- Connection Contention

- All Pods Disconnected

1. Partial Pool Utilization
