TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities, and articles.

👀 Remote Jobs

Growe is hiring a Senior DevOps Engineer

Remote Location: Worldwide

Panoptyc is hiring a Cloud Systems Specialist (AWS)

Remote Location: Worldwide

🧠 DEEP DIVE USE CASE

How Cloud Disaster Recovery Works

Any disaster recovery setup starts with two things: RTO and RPO.

Every design decision, from backup frequency and failover systems to cost and complexity, depends on these two parameters. Ideally, your business goals decide what values are acceptable.

RPO (Recovery Point Objective): How much data can you afford to lose?

If your last good backup was 30 minutes ago and you can accept losing everything written since then, your RPO is 30 minutes.

RTO (Recovery Time Objective): How quickly should everything be back up and running?

If you can tolerate 1 hour of downtime before things break badly, then your RTO is 1 hour.
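To make the two definitions concrete, here is a minimal Python sketch that measures a single incident against both targets. The function name `check_objectives` and the timestamps are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta


def check_objectives(last_backup, failure, recovered, rpo, rto):
    """Compare one incident against RPO and RTO targets.

    All names here are illustrative, not from any library.
    """
    data_loss = failure - last_backup  # everything written since the last good backup is lost
    downtime = recovered - failure     # how long the service was unavailable
    return {
        "data_loss": data_loss,
        "downtime": downtime,
        "rpo_met": data_loss <= rpo,
        "rto_met": downtime <= rto,
    }


# Hypothetical incident: backup at 09:30, failure at 10:00, service restored at 10:45.
result = check_objectives(
    last_backup=datetime(2025, 1, 1, 9, 30),
    failure=datetime(2025, 1, 1, 10, 0),
    recovered=datetime(2025, 1, 1, 10, 45),
    rpo=timedelta(minutes=30),
    rto=timedelta(hours=1),
)
print(result["rpo_met"], result["rto_met"])  # True True
```

The data loss window is measured backward from the failure, while downtime is measured forward from it, which is why the two targets are set independently.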

With RTO and RPO defined, let’s look at the most common disaster recovery patterns you would find in the real world:

- Backup and Restore

- Pilot Light

- Warm Standby

- Multi Site

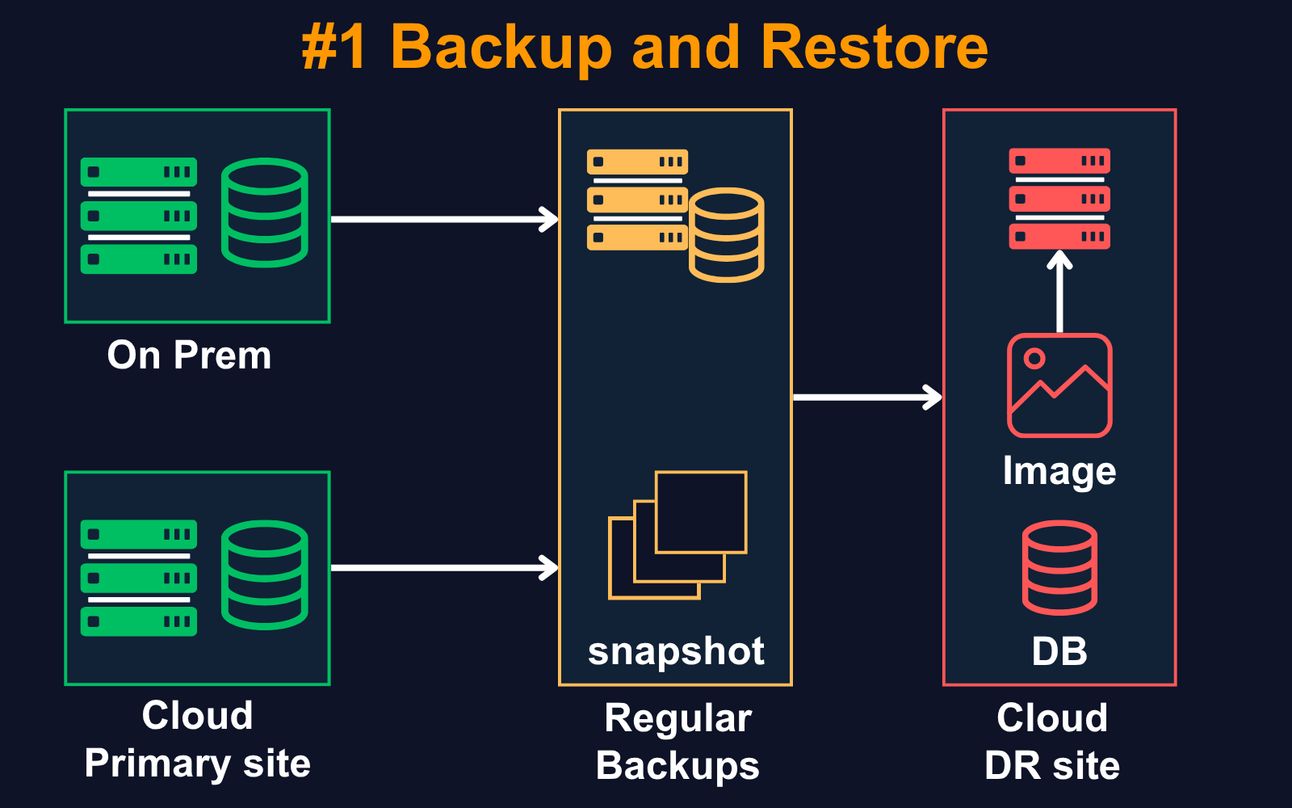

1. Backup and Restore

This is the simplest pattern to understand and the most widely used for disaster recovery in cost-sensitive environments.

Typical RTO: Several hours to days

Typical RPO: Several hours or more, bounded by the time since the last successful backup

You create regular backups of your data and systems and store them in durable storage like Amazon S3. Many teams use lifecycle policies to automatically move older backups to cheaper storage classes such as S3 Glacier or S3 Glacier Deep Archive.
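As a rough illustration of such a lifecycle policy, the sketch below builds the rule as a plain Python dictionary in the shape that boto3's `put_bucket_lifecycle_configuration` accepts. The `backups/` prefix, the rule ID, the 30 and 180 day thresholds, and the bucket name in the comment are all assumptions made for this example, not recommendations from the article.

```python
def backup_lifecycle_rules(prefix="backups/", glacier_after_days=30,
                           deep_archive_after_days=180):
    """Build an S3 lifecycle configuration that ages backups into colder storage.

    The prefix and day thresholds are hypothetical defaults.
    """
    if deep_archive_after_days <= glacier_after_days:
        raise ValueError("Deep Archive transition must come after the Glacier transition")
    return {
        "Rules": [
            {
                "ID": "age-out-backups",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_after_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


config = backup_lifecycle_rules()

# With boto3 installed and AWS credentials configured, this could be applied as:
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-backup-bucket",  # hypothetical bucket name
#       LifecycleConfiguration=config,
#   )
```

Building the configuration separately from the API call keeps it easy to review and to store alongside the rest of your infrastructure definitions.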

When a disaster occurs, you restore everything from these backups. That includes spinning up EC2 instances, restoring RDS snapshots, applying infrastructure configurations, and syncing application data.

This pattern involves no active infrastructure. Nothing runs until there is a failure.

That keeps the ongoing cost very low.

But recovery is slow and depends heavily on your process discipline.

For example, if you only back up application data but forget things like VPC configurations, IAM roles, or load balancer settings, your restore will break.

In AWS, this often means rebuilding networking and permissions from scratch before your app is even reachable.
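One way to guard against this kind of gap is to check each backup run against a list of everything a restore needs. The sketch below is a minimal version of that idea; the component names and the `missing_components` helper are hypothetical, and a real list would come from your own architecture.

```python
# Hypothetical list of what a full restore depends on, based on the examples above.
REQUIRED_COMPONENTS = {
    "application_data",
    "vpc_config",
    "iam_roles",
    "load_balancer_settings",
}


def missing_components(backed_up):
    """Return the required components that a backup run did not capture."""
    return sorted(REQUIRED_COMPONENTS - set(backed_up))


# A backup run that captured only application data.
print(missing_components(["application_data"]))
# ['iam_roles', 'load_balancer_settings', 'vpc_config']
```

Running a check like this after every backup surfaces the gap long before a disaster does.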

Restoring large datasets also takes time, and the storage class matters. S3 Glacier Deep Archive, for instance, takes up to 12 hours to return objects with standard retrieval and up to 48 hours with bulk retrieval, before any data transfer begins.
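A back-of-the-envelope estimate helps show how retrieval delay and transfer time add up. This is a sketch under stated assumptions: the 2 TB dataset size and the 100 MB/s sustained throughput are made-up figures, and `estimated_restore_hours` is a hypothetical helper.

```python
def estimated_restore_hours(dataset_gb, throughput_mb_per_s, retrieval_delay_hours=0.0):
    """Rough restore time: wait for retrieval, then copy the data."""
    transfer_seconds = (dataset_gb * 1000) / throughput_mb_per_s  # GB to MB, decimal units
    return retrieval_delay_hours + transfer_seconds / 3600


# Hypothetical: 2 TB of backups, 100 MB/s sustained restore throughput,
# and a 12-hour retrieval delay from Glacier Deep Archive.
hours = estimated_restore_hours(2000, 100, retrieval_delay_hours=12)
print(round(hours, 1))  # 17.6
```

If the estimate already exceeds your RTO before any infrastructure is rebuilt, the backups belong in a faster storage class or the workload needs a different pattern.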

This approach is commonly used for internal tools, dev environments, or workloads where occasional downtime is acceptable.

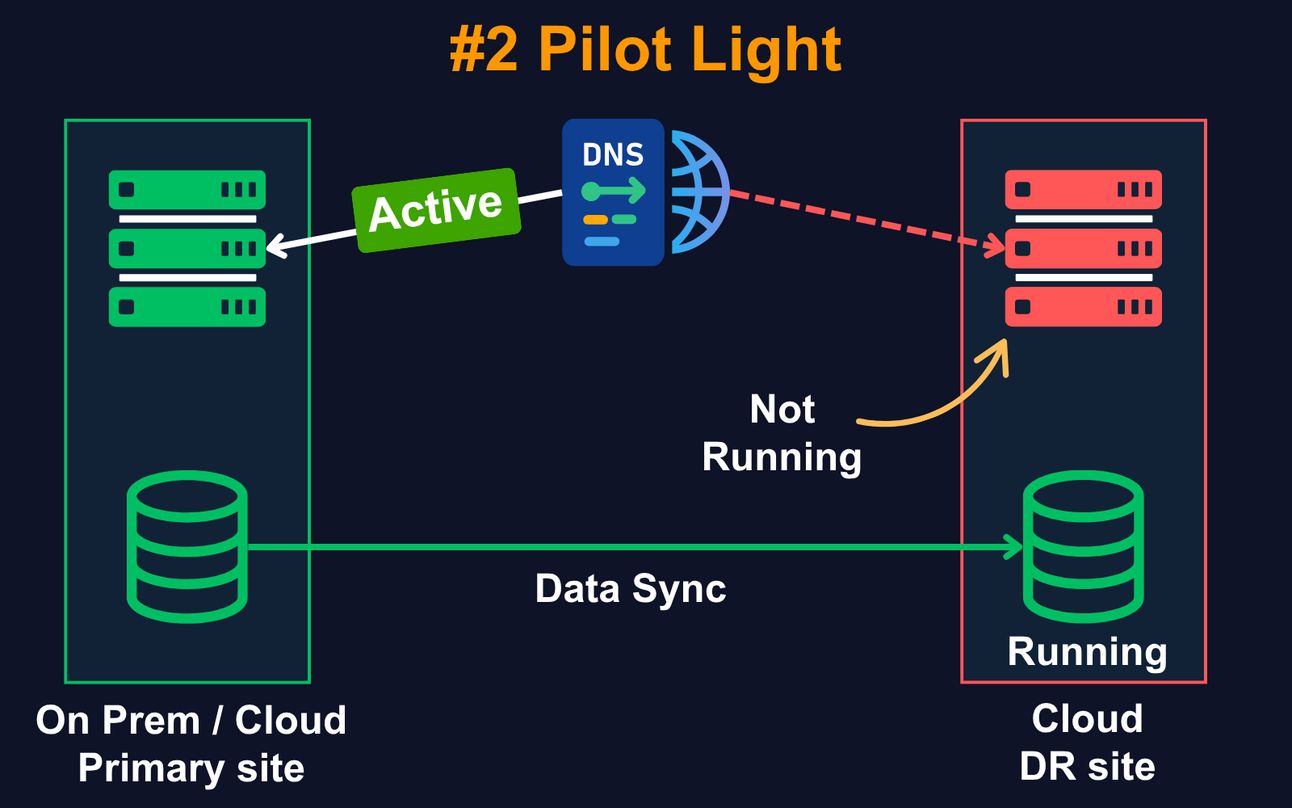

2. Pilot Light

In many real-world DR designs, Pilot Light is the stage where teams begin balancing recovery speed with cost. You keep only the essential components running and spin up the rest when a disaster occurs.

Typical RTO: Minutes to a few hours

Typical RPO: Depends on how frequently data is synchronized
