How AWS Cloud Traffic Routing Works

TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a bite-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities, and articles.

👋 👋 A big thank you to today's sponsor MINDSTREAM

Turn AI Into Extra Income

You don’t need to be a coder to make AI work for you. Subscribe to Mindstream and get 200+ proven ideas showing how real people are using ChatGPT, Midjourney, and other tools to earn on the side.

From small wins to full-on ventures, this guide helps you turn AI skills into real results, without the overwhelm.

👀 Remote Jobs

Alpaca is hiring a Senior DevOps Engineer

Remote Location: Worldwide

Toogeza is hiring a Platform/DevOps Engineer

Remote Location: Worldwide

📚️ Resources

Looking to promote your company, product, service, or event to 57,000+ Cloud Native Professionals? Let's work together. Advertise With Us

🧠 DEEP DIVE USE CASE

How AWS Cloud Traffic Routing Works

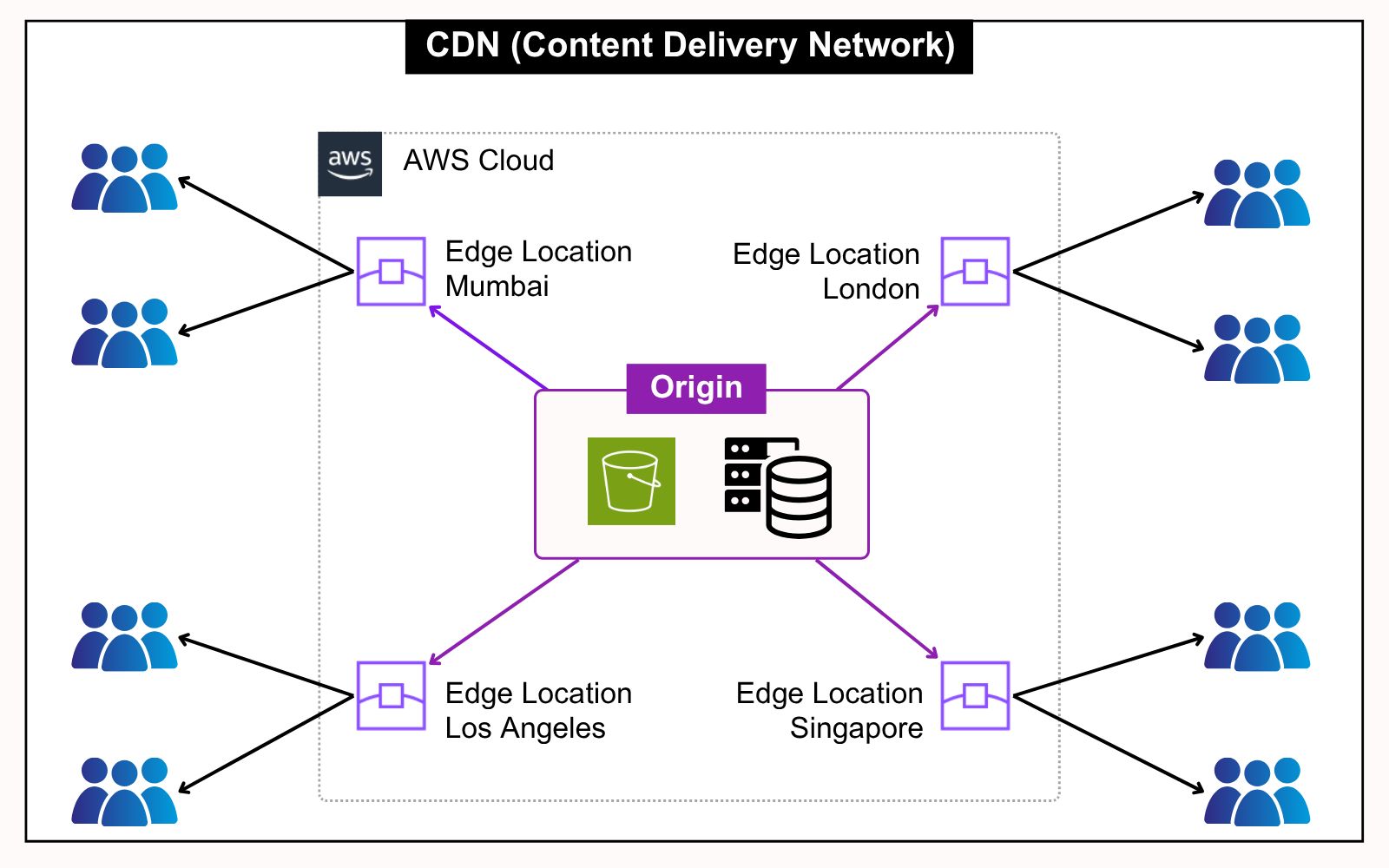

If you use Netflix, Hotstar, or any other OTT platform and the video starts instantly without buffering, you're already seeing a CDN at work.

A Content Delivery Network (CDN) is a globally distributed system that delivers web content to users from the nearest possible location. It reduces load times, cuts latency, and improves performance.

At the heart of a CDN setup, there are two key components:

Origin:

This is where your main content lives. It could be a web server, a storage bucket like Amazon S3, or any backend system holding your files, APIs, images, or videos. The origin is the single source of truth for all your content.

Edge Locations:

These are geographically distributed servers that cache and serve content closer to end users. When a user in London or Mumbai requests a file, the nearest edge location handles the request instead of sending it all the way to an origin server in the US.
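To make "nearest" concrete, here is a minimal sketch that picks the edge location with the lowest measured latency for a client, since the fastest path matters more than raw map distance. The edge names and latency figures are hypothetical; CloudFront makes this choice itself, and the code only illustrates the idea.

```python
from typing import Dict


def pick_edge(latencies_ms: Dict[str, float]) -> str:
    """Return the edge location with the lowest measured round-trip latency."""
    if not latencies_ms:
        raise ValueError("no edge locations available")
    # Rank edges by their latency value and keep the smallest
    return min(latencies_ms, key=latencies_ms.__getitem__)


# Hypothetical latencies (ms) measured from two clients
from_mumbai = {"mumbai-edge": 8.0, "london-edge": 120.0, "virginia-edge": 190.0}
from_london = {"mumbai-edge": 115.0, "london-edge": 6.0, "virginia-edge": 75.0}

print(pick_edge(from_mumbai))  # mumbai-edge
print(pick_edge(from_london))  # london-edge
```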

Here’s how the flow works in real-world usage:

- User requests go to the nearest edge location.

- If the content is cached there, it is served instantly.

- If not, the edge fetches it from the origin, caches it, and delivers it.

- Future requests from that region are served directly from the edge.
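The four steps above boil down to a read-through cache with an expiry. The sketch below is a toy model, not CloudFront's implementation: `EdgeCache`, the `ttl_seconds` default, and the `origin` function are hypothetical names chosen for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy edge location: serve from cache on a hit, fetch from the origin on a miss."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[bytes, float]] = {}  # key -> (body, expiry time)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[1]:
            self.hits += 1  # cached and still fresh: serve from the edge
            return entry[0]
        self.misses += 1  # not cached (or expired): go back to the origin
        body = self._fetch(key)
        self._store[key] = (body, now + self._ttl)  # cache it for later requests
        return body


origin_calls = []


def origin(path: str) -> bytes:
    """Hypothetical origin that records every request it receives."""
    origin_calls.append(path)
    return f"content of {path}".encode()


edge = EdgeCache(origin, ttl_seconds=60)
edge.get("/videos/intro.mp4")  # miss: fetched from the origin and cached
edge.get("/videos/intro.mp4")  # hit: served straight from the edge
print(edge.hits, edge.misses, len(origin_calls))  # 1 1 1
```

Entries expire after `ttl_seconds`, and the next request after that goes back to the origin. In CloudFront, expiry is governed by cache policy TTL settings and the origin's Cache-Control headers, which this sketch does not model.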

This setup not only speeds up delivery but also reduces the load on the origin, saves origin bandwidth, and improves reliability during traffic spikes. Take AWS as an example: it offers Amazon CloudFront as its CDN service, which uses edge locations to cache and serve content.

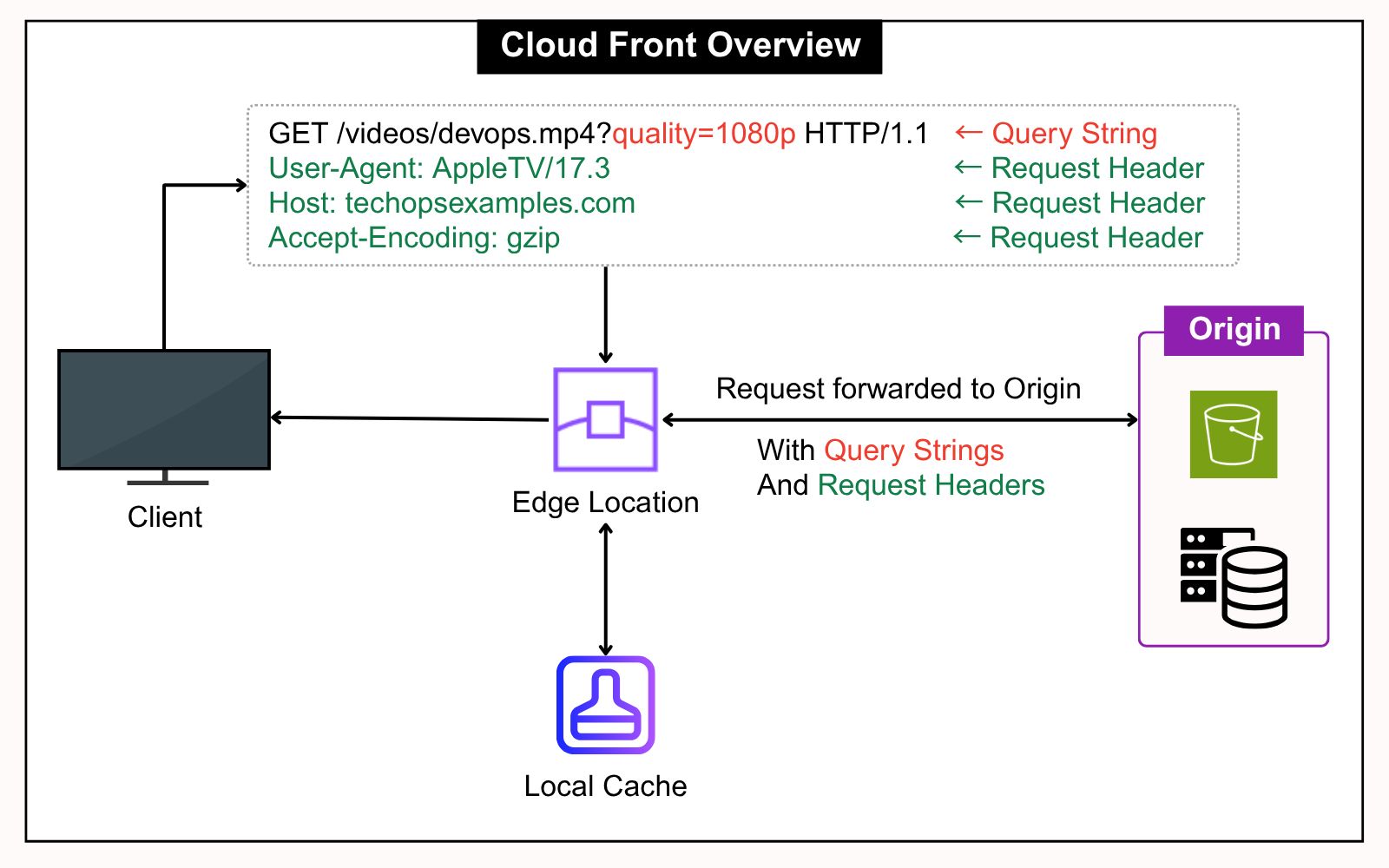

When a client sends a request, CloudFront checks the edge cache using a cache key derived from that request. By default, the cache key is the distribution's domain name plus the URL path. A cache policy can add query strings like ?quality=1080p and headers such as User-Agent or Accept-Encoding, so different devices or formats get separate cached copies.

If there's no match for the key, CloudFront forwards the request to the origin, caches the response, and serves it. This speeds up delivery, lets responses adapt to device needs, and reduces origin load.
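A minimal sketch of cache-key construction, assuming a CloudFront-style default of domain name plus URL path and a hypothetical `CachePolicy` that allow-lists extra query strings and headers. This is not CloudFront's internal format: the `|`-joined string is an invented layout, and `d111111abcdef8.cloudfront.net` is only a placeholder domain.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet
from urllib.parse import parse_qsl, urlsplit


@dataclass(frozen=True)
class CachePolicy:
    """Hypothetical policy listing which query strings and headers join the cache key."""
    query_strings: FrozenSet[str] = frozenset()
    headers: FrozenSet[str] = frozenset()  # matched case-insensitively


def cache_key(host: str, url: str, headers: Dict[str, str], policy: CachePolicy) -> str:
    """Build a cache key from the domain, the path, and whatever the policy allows."""
    parts = urlsplit(url)
    # Keep only allow-listed query parameters, sorted so their order does not matter
    qs = "&".join(
        f"{k}={v}" for k, v in sorted(parse_qsl(parts.query)) if k in policy.query_strings
    )
    # Header names are case-insensitive, so normalize them before filtering
    wanted = {h.lower() for h in policy.headers}
    normalized = sorted((name.lower(), value) for name, value in headers.items())
    hdrs = ";".join(f"{k}:{v}" for k, v in normalized if k in wanted)
    return "|".join([host.lower(), parts.path, qs, hdrs])


HOST = "d111111abcdef8.cloudfront.net"  # placeholder distribution domain
req_headers = {"User-Agent": "Mozilla/5.0", "Accept-Encoding": "gzip"}
url = "/videos/intro.mp4?quality=1080p&utm_source=mail"

default = CachePolicy()  # domain + path only
video = CachePolicy(query_strings=frozenset({"quality"}), headers=frozenset({"Accept-Encoding"}))

print(cache_key(HOST, url, req_headers, default))
# d111111abcdef8.cloudfront.net|/videos/intro.mp4||
print(cache_key(HOST, url, req_headers, video))
# d111111abcdef8.cloudfront.net|/videos/intro.mp4|quality=1080p|accept-encoding:gzip
```

Every component added to the key splits the cache into more entries. Here `utm_source` and `User-Agent` are left out, so requests that differ only in those values share one cached copy, while a different `quality` value gets its own.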

Routing policies then decide which origin a request reaches, based on geography, latency, health checks, or a weighted traffic split.
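The four criteria in that sentence can be modeled as small selection functions. This is a minimal sketch under stated assumptions: the `Origin` record, its fields, and the example regions, latencies, and weights are hypothetical, and real AWS routing policies are configured in the service rather than written as application code.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Origin:
    name: str
    region: str
    latency_ms: float  # latency from the requesting client's location
    weight: int  # relative share of traffic in a weighted split
    healthy: bool = True


def route_geo(origins: List[Origin], client_region: str, default: Origin) -> Origin:
    """Geography: prefer a healthy origin in the client's region, else the default."""
    for o in origins:
        if o.healthy and o.region == client_region:
            return o
    return default


def route_latency(origins: List[Origin]) -> Origin:
    """Latency: the healthy origin with the lowest measured latency."""
    healthy = [o for o in origins if o.healthy]
    if not healthy:
        raise RuntimeError("no healthy origin")
    return min(healthy, key=lambda o: o.latency_ms)


def route_failover(primary: Origin, secondary: Origin) -> Origin:
    """Health: use the primary while it passes health checks, otherwise the secondary."""
    return primary if primary.healthy else secondary


def route_weighted(origins: List[Origin], rng: random.Random) -> Origin:
    """Traffic split: pick a healthy origin at random in proportion to its weight."""
    pool = [o for o in origins if o.healthy and o.weight > 0]
    if not pool:
        raise RuntimeError("no healthy origin")
    return rng.choices(pool, weights=[o.weight for o in pool], k=1)[0]


us = Origin("us-origin", "us", latency_ms=180.0, weight=90)
eu = Origin("eu-origin", "eu", latency_ms=40.0, weight=10)
origins = [us, eu]

print(route_geo(origins, "eu", default=us).name)  # eu-origin
print(route_latency(origins).name)  # eu-origin
rng = random.Random(42)
picks = [route_weighted(origins, rng).name for _ in range(1000)]
print(picks.count("us-origin"))  # close to 90% of the 1000 picks; exact count depends on the seed

eu.healthy = False  # simulate a failed health check
print(route_latency(origins).name)  # us-origin
print(route_failover(eu, us).name)  # us-origin
```

The geo, latency, and weighted selectors all skip origins marked unhealthy, so a failed health check shifts traffic away whichever of them is in play.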

Here are six traffic routing policies you’ll often see in production:

🔴 Get my DevOps & Kubernetes ebooks! (free for Premium Club and Personal Tier newsletter subscribers)
