TechOps Examples

Hey — It's Govardhana MK 👋

Welcome to another technical edition.

Every Tuesday – You’ll receive a free edition with a byte-size use case, remote job opportunities, top news, tools, and articles.

Every Thursday and Saturday – You’ll receive a special edition with a deep dive use case, remote job opportunities, and articles.

👋 Before we begin... a big thank you to today's sponsor FELLOW APP

AI Notetakers Are Quietly Leaking Risk. Audit Yours With This Checklist.

AI notetakers are becoming standard issue in meetings, but most teams haven’t vetted them properly.

✔️ Is AI trained on your data?

✔️ Where is the data stored?

✔️ Can admins control what gets recorded and shared?

This checklist from Fellow lays out the non-negotiables for secure AI in the workplace.

If your vendor can’t check all the boxes, you need to ask why.

👀 Remote Jobs

EverOps is hiring a Lead, DevOps Engineer | Pod Lead

Remote Location: Worldwide

Toogeza is hiring a Platform/DevOps Engineer

Remote Location: Worldwide


🧠 DEEP DIVE USE CASE

AWS S3 Performance Optimization in a Production Environment

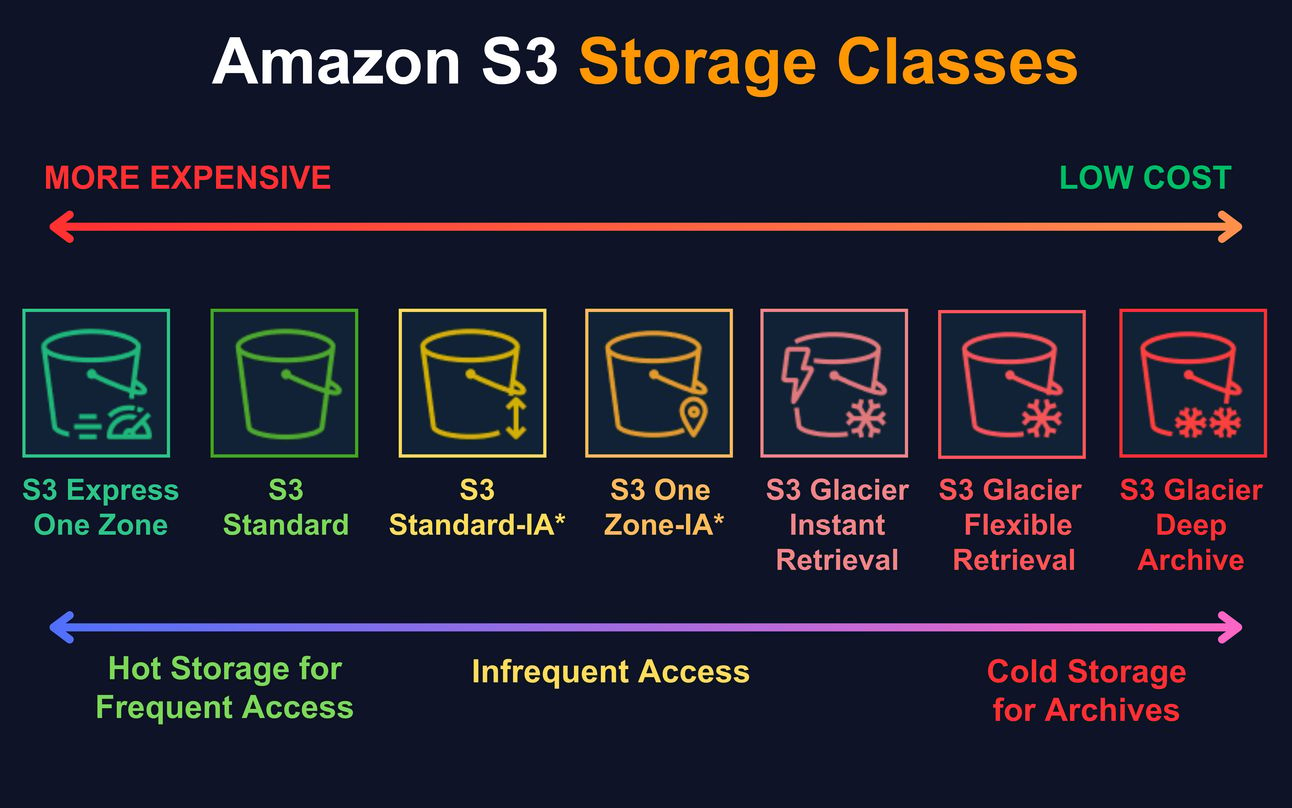

Too often, I see cloud practitioners jump straight into S3 performance tweaks without understanding storage classes. Picking the wrong class silently drains both performance and money.

Here’s what each storage class actually offers:

S3 Express One Zone

Single-digit millisecond latency, over 10 GBps per prefix, single AZ, best for high performance compute or AI pipelines.

S3 Standard

99.99% availability, multi AZ, millisecond latency, suitable for frequently accessed data.

S3 Standard-Infrequent Access

99.9% availability, multi AZ, 30 day minimum storage, per GB retrieval charge, ideal for infrequently accessed but critical data.

S3 One Zone-Infrequent Access

Same as Standard-IA but in one AZ, lower cost, 99.5% availability, use for secondary backups or recreatable data.

S3 Glacier Instant Retrieval

Millisecond access, archival grade pricing, multi AZ, for active archives like medical or legal data.

S3 Glacier Flexible Retrieval

Access in minutes or hours, cheaper than Instant, with options for expedited, standard, and bulk retrieval.

S3 Glacier Deep Archive

Lowest cost, 12 to 48 hour retrieval, for long-term archives and compliance data.

On top of this, use S3 Intelligent-Tiering to automatically move data across Frequent, Infrequent, Archive, and Deep Archive access tiers based on actual access patterns. There are no retrieval fees; you pay only a small monitoring charge per object.
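When you set a class in code, the names above correspond to `StorageClass` values in the S3 API. Here is a minimal lookup sketch that filters classes by two of the properties described above. The `S3Class` tuple and `matching_classes` helper are hypothetical names used only for illustration, and the table deliberately ignores cost and minimum storage duration.

```python
from typing import NamedTuple


class S3Class(NamedTuple):
    api_value: str        # value accepted by the S3 API's StorageClass parameter
    multi_az: bool        # stored across multiple Availability Zones
    instant_access: bool  # millisecond-level reads with no restore step


# Properties follow the storage class descriptions above.
S3_CLASSES = {
    "S3 Express One Zone": S3Class("EXPRESS_ONEZONE", False, True),
    "S3 Standard": S3Class("STANDARD", True, True),
    "S3 Standard-Infrequent Access": S3Class("STANDARD_IA", True, True),
    "S3 One Zone-Infrequent Access": S3Class("ONEZONE_IA", False, True),
    "S3 Glacier Instant Retrieval": S3Class("GLACIER_IR", True, True),
    "S3 Glacier Flexible Retrieval": S3Class("GLACIER", True, False),
    "S3 Glacier Deep Archive": S3Class("DEEP_ARCHIVE", True, False),
}


def matching_classes(need_multi_az, need_instant_access):
    """Return the API values of classes that satisfy both requirements."""
    return [
        c.api_value
        for c in S3_CLASSES.values()
        if (c.multi_az or not need_multi_az)
        and (c.instant_access or not need_instant_access)
    ]
```

For critical data that must be readable immediately, `matching_classes(True, True)` narrows the choice to Standard, Standard-IA, and Glacier Instant Retrieval. Access frequency and cost then decide between them.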

With this basic understanding, let's look at the key aspects of S3 performance optimization:

Multi Part Upload

S3 Transfer Acceleration

S3 Select and Glacier Select

Pre signed URLs

1. Multi Part Upload

In production, uploading anything over 100 MB without multi part upload is asking for trouble. We’ve seen S3 PUT operations fail silently on large monolithic uploads, especially over unstable or throttled VPC endpoints.

For objects of that size, always use the Multi Part Upload API. It breaks the file into parts and uploads them in parallel, which improves speed and fault tolerance: a failed part can be retried on its own instead of restarting the whole upload.
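To make the flow concrete, here is a minimal sketch of the three-step sequence (create, upload parts in parallel, complete). It is not a production uploader. The `upload_in_parts` name and its defaults are assumptions for illustration. The `client` argument is assumed to behave like a boto3 S3 client, whose `create_multipart_upload`, `upload_part`, `complete_multipart_upload`, and `abort_multipart_upload` methods take the keyword arguments used here.

```python
import os
from concurrent.futures import ThreadPoolExecutor

MIB = 1024 * 1024


def upload_in_parts(client, path, bucket, key, part_size=25 * MIB, max_workers=5):
    """Upload a local file to S3 in parallel parts (illustrative sketch).

    Real S3 requires every part except the last to be at least 5 MiB;
    this sketch does not enforce that.
    """
    size = os.path.getsize(path)
    if size == 0:
        raise ValueError("empty files should use a plain PUT instead")

    upload_id = client.create_multipart_upload(Bucket=bucket, Key=key)["UploadId"]
    part_count = -(-size // part_size)  # ceiling division

    def send(part_number):
        # Each worker opens its own handle so seeks do not interfere.
        with open(path, "rb") as f:
            f.seek((part_number - 1) * part_size)
            body = f.read(part_size)
        resp = client.upload_part(
            Bucket=bucket, Key=key, UploadId=upload_id,
            PartNumber=part_number, Body=body,
        )
        return {"PartNumber": part_number, "ETag": resp["ETag"]}

    try:
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            # map() yields results in part-number order, as completion requires.
            parts = list(pool.map(send, range(1, part_count + 1)))
        client.complete_multipart_upload(
            Bucket=bucket, Key=key, UploadId=upload_id,
            MultipartUpload={"Parts": parts},
        )
    except Exception:
        # Abort so already-uploaded parts do not keep accruing storage charges.
        client.abort_multipart_upload(Bucket=bucket, Key=key, UploadId=upload_id)
        raise
    return parts
```

With boto3 installed, `client` would typically be `boto3.client("s3")`. In practice, boto3's higher-level `upload_file` already handles multipart splitting, so a hand-rolled loop like this is mainly useful for understanding the mechanics or for custom retry logic.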

Field tested recommendations:

Set part size between 25 MB and 100 MB

Keep total parts within the S3 limit of 10,000 per object

Use 5 to 10 concurrent threads for uploads from EC2

Pre calculate part count for files over 100 GB so the chosen part size stays within that limit

Monitor CompleteMultipartUpload metrics. Spikes indicate backend retries or IOPS saturation.

In VPC or low bandwidth setups, avoid aggressive concurrency. Tune part size and thread count to the available bandwidth. Pass --checksum-algorithm SHA256 (AWS CLI) if end-to-end integrity matters.
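The part-count recommendation is simple arithmetic: at 25 MiB per part, the 10,000-part limit is reached at roughly 244 GiB, so larger objects need bigger parts. Here is a minimal sketch of that pre-calculation. The `plan_parts` name and the rounding to whole MiB are assumptions for illustration, and sizes use binary units (MiB, GiB) because that is how S3 states its limits.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB
MAX_PARTS = 10_000        # S3 limit on parts per multipart upload
MAX_PART_SIZE = 5 * GIB   # S3 limit on the size of a single part


def plan_parts(size_bytes, preferred_part_size=25 * MIB):
    """Return (part_size, part_count) that respects the 10,000-part limit."""
    if size_bytes <= 0:
        raise ValueError("size_bytes must be positive")

    part_size = preferred_part_size
    if -(-size_bytes // part_size) > MAX_PARTS:
        # Preferred size would need too many parts: grow the part size to
        # the smallest whole number of MiB that fits within MAX_PARTS.
        needed = -(-size_bytes // MAX_PARTS)
        part_size = -(-needed // MIB) * MIB

    if part_size > MAX_PART_SIZE:
        raise ValueError("object too large for a single multipart upload")

    part_count = -(-size_bytes // part_size)  # ceiling division
    return part_size, part_count


# A 100 GiB object fits comfortably at the preferred 25 MiB part size.
size_100g, count_100g = plan_parts(100 * GIB)
# A 1 TiB object forces the part size up to stay under 10,000 parts.
size_1t, count_1t = plan_parts(1024 * GIB)
```

For the 1 TiB case the part size grows to 105 MiB, slightly above the 25 to 100 MB range recommended above. That is the trade-off the limit imposes on very large objects.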

2. S3 Transfer Acceleration

A common misconception is that enabling Transfer Acceleration will always make S3 uploads faster. In reality, it helps only when data is uploaded over long geographic distances.

For same region or intra VPC uploads, it often adds no benefit and just increases cost.
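That rule of thumb can be encoded when choosing which endpoint to upload to. The sketch below assumes that "client in a different region than the bucket" is a reasonable proxy for long geographic distance. That is a simplification, and `upload_endpoint` is a hypothetical helper. The two URL formats are the standard S3 virtual-hosted and Transfer Acceleration endpoints. Acceleration must also be enabled on the bucket, and it is not available for bucket names containing dots.

```python
def upload_endpoint(bucket, bucket_region, client_region):
    """Pick the accelerate endpoint only when it is likely to help."""
    cross_region = client_region != bucket_region
    dns_safe = "." not in bucket  # acceleration rejects dotted bucket names

    if cross_region and dns_safe:
        return f"https://{bucket}.s3-accelerate.amazonaws.com"
    # Same-region traffic: the regular regional endpoint avoids the extra fee.
    return f"https://{bucket}.s3.{bucket_region}.amazonaws.com"
```

Before committing to acceleration for a given client location, it is worth measuring upload times against both endpoints, since the benefit depends on the actual network path.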
